@rjparker1 said:

@wesman said:

Just an FYI: 99.9% of all hard drives on the market today are MORE than fast enough, typically running at 3+ Gbps, but you are almost ALWAYS going to be limited by your 1 Gbps network (110 MBps typically). Further, a 30GB Blu-ray rip only needs ~4 to 5 MBps to Direct Play… So speed (for Plex) is not an issue… If Plex is the needed issue, then I would go with something RAIDed 0/1/5/10 for redundancy issues. Your biggest concern is protecting the data from drive failures, not how fast the drive is.

WRONG. WRONG. WRONG. Network is NOT the weakest link… hard drives are… PERIOD. That is incorrect info. Network will NEVER be the bottleneck… apps, drives, OS will ALL interfere LONG before you run out of network bandwidth. I will GUARANTEE you that.

You really should educate yourself on TCP/IP, the protocols, the real-world speeds, etc. before saying such foolish stuff. With a single 1 Gb network card I can saturate it all day long with my Plex server. I was doing this so often that I upgraded my networking a few years back.

And 1 Gb is 112 MB (big ‘B’) of bandwidth… Blu-ray needs about 16 Mbps (little ‘b’); 4 to 5 is more for standard DVD movies.

You could stream roughly 80 Blu-ray videos simultaneously over the SAME gig network. Plex, the OS and the drive could not withstand that much activity… but the network surely can…

Let’s take Juno as an example because the math is easy: 40 Mbps / 8 = 5 MB/s per stream. Assuming a perfect network world with absolutely no hiccups, no overhead, perfect drivers, etc., a 1 Gbps link gives you 1000 / 8 = 125 MB/s of bandwidth. So purely in theory you could stream 125 / 5 = 25 of them. Real world it’s closer to 20 if you’re lucky.
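To sanity-check that arithmetic, here is a rough Python sketch. The 40 Mbps per stream and the 1 Gbps link come from the example above; the function name `max_streams` and the 80% efficiency figure are my own assumptions, with 80% picked only because it lands on the "closer to 20" real-world number.

```python
def max_streams(link_mbps: int, stream_mbps: int, efficiency_pct: int = 100) -> int:
    """Whole number of streams that fit in the usable share of a link.

    efficiency_pct is an assumed fudge factor for protocol overhead,
    drivers, etc. It is not a measured value.
    """
    # Integer math keeps the result exact (no floating-point rounding).
    return (link_mbps * efficiency_pct) // (stream_mbps * 100)


# Bits to bytes: divide by 8.
print(1000 / 8)                    # 125.0 (MB/s on a 1 Gbps link)
print(40 / 8)                      # 5.0   (MB/s for one 40 Mbps stream)

print(max_streams(1000, 40))       # 25 (perfect network)
print(max_streams(1000, 40, 80))   # 20 (assumed 80% usable)
```

Swap in your own bitrate (UHD rips run well above 40 Mbps) to see how quickly the stream count drops.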

That’s conventional 1080p. How about UHD and similar? Do your movies and TV shows magically appear on your server, or do you have to upload them to the server? Do you run FTP or anything else on the server?

Can you watch a couple of Blu-ray videos and upload new media to your server at the same time?

As your Plex server grows in function it becomes very easy to saturate the network link while only using a portion of the IO of your drives (spindle or digital).
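A small sketch of how other traffic eats into the stream budget. Everything here is illustrative: `streams_left` is a made-up helper, the 600 Mbps of other traffic is an assumed figure, and it treats the link as a single shared budget, which is a simplification (a full-duplex link has separate capacity in each direction, so this really models traffic competing in the same direction as the streams).

```python
def streams_left(link_mbps: int, stream_mbps: int, other_traffic_mbps: int) -> int:
    """Streams that still fit after other traffic takes its share of the link.

    Simplification: one shared budget, no overhead, no per-direction split.
    """
    remaining_mbps = max(link_mbps - other_traffic_mbps, 0)
    return remaining_mbps // stream_mbps


# 40 Mbps streams on a 1 Gbps link, as in the Juno example.
print(streams_left(1000, 40, 0))     # 25 (idle link)
print(streams_left(1000, 40, 600))   # 10 (assumed 600 Mbps of FTP/backup/etc.)
print(streams_left(1000, 40, 1000))  # 0  (link already saturated)
```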

Of course, I’m playing devil’s advocate here and really pushing the limits, but for many systems the NIC will be the first bottleneck that needs fixing to up your “pipe” size.

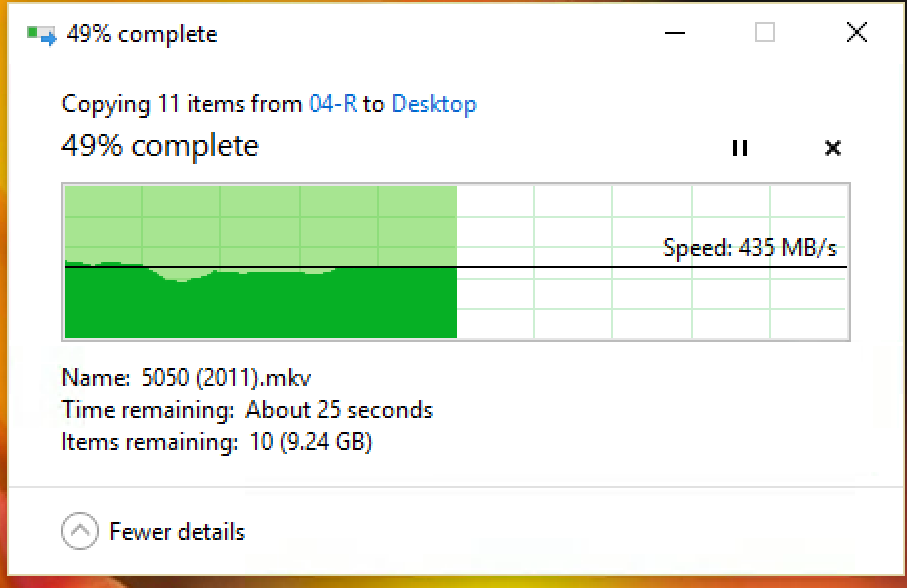

BTW, caching is the key to good disk IO performance. I can still saturate my 4 Gb network before I can saturate the disk IO.