Server Version#: 1.19.3.2764-ef515a800

Player Version#: 8.1.0.17650 nVidia shield

Docker Image#: plexinc/pms-docker latest a0ffee54489d 10 days ago 484M

Host: Ubuntu 20.04 LTS 4 Core CPU, 6GB RAM

docker_inspect_plex.txt (14.8 KB)

Plex_crash_or_offline.zip (8.1 MB)

I recently migrated my plex server over to docker, putting the config/Application Data folder on a hard drive mount that would solely be used for Plex. Since migrating to docker, I have noticed that the service is constantly crashing, skipping, or having issues connecting. When I check docker logs --follow plex I see several DB issues:

Sqlite3: Sleeping for 200ms to retry busy DB.

Sqlite3: Sleeping for 200ms to retry busy DB.

Sqlite3: Sleeping for 200ms to retry busy DB.

Sqlite3: Sleeping for 200ms to retry busy DB.

Sqlite3: Sleeping for 200ms to retry busy DB.

Sqlite3: Sleeping for 200ms to retry busy DB.

Sqlite3: Sleeping for 200ms to retry busy DB.

Sqlite3: Sleeping for 200ms to retry busy DB.

Sqlite3: Sleeping for 200ms to retry busy DB.

Sqlite3: Sleeping for 200ms to retry busy DB.

Connection to 184.105.148.97 closed by remote host.

Connection to 184.105.148.86 closed by remote host.

I was wondering what I can do to resolve this. I was hoping to use docker as a way to keep the system cleaner and have Plex reside in its own space, eventually moving this to k8s once everything is working properly there.

Plex_crash_or_offline.zip contains my entire Plex log folder, while docker_inspect_plex.txt contains just the inspect data for the docker container itself. I'm not sure what is causing the slow IO issues since, spec-wise, the system should be fully capable of running this. Originally, I had another host running on this same system with the exact same specs. I just made a new VM and switched everything to docker, and all of it is local to that VM (no network shares for the app itself; there are network shares for the media, but that hasn’t changed since running Plex on the host itself).

Would appreciate any insight, since if I restart the container, everything works as if nothing happened. Plex constantly recommends trying an insecure connection, but whether the connection is secure or insecure, it still fails to load because of the busy database.

What tuning can be done here to either speed up the database read/writes, or something else to make this work smoothly?
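For anyone following along, here is a minimal sketch of what a "busy" SQLite database looks like from the outside. It is not Plex's code: the throwaway `demo.db` file, the `items` table, and the 200 ms timeout are assumptions picked only to mirror the log message above. One connection holds the write lock while a second one asks for it and gives up after the timeout.

```python
import os
import sqlite3
import tempfile


def try_write(db_path, timeout_s):
    """Try to start a write transaction; return 'ok' or the SQLite error text."""
    conn = sqlite3.connect(db_path, timeout=timeout_s, isolation_level=None)
    try:
        conn.execute("BEGIN IMMEDIATE")  # ask for the write lock up front
        conn.execute("ROLLBACK")
        return "ok"
    except sqlite3.OperationalError as exc:
        return str(exc)
    finally:
        conn.close()


def demo():
    """Show one writer blocking another, then the same write succeeding."""
    with tempfile.TemporaryDirectory() as tmp:
        db_path = os.path.join(tmp, "demo.db")  # throwaway file, not the Plex DB
        writer = sqlite3.connect(db_path, isolation_level=None)
        writer.execute("CREATE TABLE items (id INTEGER PRIMARY KEY)")
        writer.execute("BEGIN IMMEDIATE")  # hold the write lock (hypothetical long scan)
        blocked = try_write(db_path, timeout_s=0.2)  # retries ~200 ms, then fails
        writer.execute("COMMIT")  # release the lock
        free = try_write(db_path, timeout_s=0.2)
        writer.close()
        return blocked, free


if __name__ == "__main__":
    print(demo())
```

The point of the sketch is that the second writer is only as fast as the first one lets it be, so anything that makes a single transaction slow (storage latency, a long library scan) shows up as busy retries for everyone else.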

Whatever you’ve done, it’s not working well.

Did you give it enough resources (CPU & Memory )?

The database keeps going busy / locking.

May 15, 2020 11:32:04.094 [0x7f3f35f44700] DEBUG - Completed: [192.168.2.1:63202] 200 GET /hubs/home/continueWatching?includeExternalMedia=1 (36 live) TLS GZIP Page 0-15 230ms 1470 bytes (pipelined: 2)

May 15, 2020 11:32:04.384 [0x7f3e977fe700] DEBUG - [com.plexapp.agents.none] Plug-in is starting, waiting 139 seconds for it to complete.

May 15, 2020 11:32:04.730 [0x7f3ec0ff9700] WARN - Waited one whole second for a busy database.

May 15, 2020 11:32:05.741 [0x7f3ec0ff9700] ERROR - Failed to begin transaction (../Library/MetadataItem.cpp:8430) (tries=1): Cannot begin transaction. database is locked

May 15, 2020 11:32:05.769 [0x7f3e977fe700] DEBUG - [com.plexapp.agents.none] Plug-in is starting, waiting 138 seconds for it to complete.

May 15, 2020 11:32:07.187 [0x7f3ea67fc700] DEBUG - Request: [127.0.0.1:52590 (Loopback)] GET /identity (37 live) Signed-in

May 15, 2020 11:32:07.187 [0x7f3f35f44700] DEBUG - Completed: [127.0.0.1:52590] 200 GET /identity (37 live) 0ms 398 bytes (pipelined: 1)

May 15, 2020 11:32:07.208 [0x7f3ea3ff7700] DEBUG - Request: [127.0.0.1:52594 (Loopback)] GET /library/changestamp (37 live) GZIP Signed-in Token (savantali)

May 15, 2020 11:32:07.208 [0x7f3f35f44700] DEBUG - Completed: [127.0.0.1:52594] 200 GET /library/changestamp (37 live) GZIP 0ms 478 bytes

May 15, 2020 11:32:07.209 [0x7f3ea2ff5700] DEBUG - Request: [127.0.0.1:52598 (Loopback)] GET /library/changestamp (37 live) GZIP Signed-in Token (savantali)

May 15, 2020 11:32:07.209 [0x7f3f35f44700] DEBUG - Completed: [127.0.0.1:52598] 200 GET /library/changestamp (37 live) GZIP 0ms 478 bytes

May 15, 2020 11:32:07.538 [0x7f3e977fe700] DEBUG - [com.plexapp.agents.none] Plug-in is starting, waiting 137 seconds for it to complete.

May 15, 2020 11:32:07.606 [0x7f3f0a7fc700] DEBUG - Sync: uploadStatus

May 15, 2020 11:32:07.755 [0x7f3ec0ff9700] WARN - Waited one whole second for a busy database.

May 15, 2020 11:32:08.766 [0x7f3ec0ff9700] ERROR - Failed to begin transaction (../Library/MetadataItem.cpp:8430) (tries=2): Cannot begin transaction. database is locked

May 15, 2020 11:32:09.076 [0x7f3e977fe700] DEBUG - [com.plexapp.agents.none] Plug-in is starting, waiting 136 seconds for it to complete.

May 15, 2020 11:32:10.152 [0x7f3e977fe700] DEBUG - [com.plexapp.agents.none] Plug-in is starting, waiting 135 seconds for it to complete.

May 15, 2020 11:32:10.802 [0x7f3ec0ff9700] WARN - Waited one whole second for a busy database.

May 15, 2020 11:32:11.304 [0x7f3e977fe700] DEBUG - [com.plexapp.agents.none] Plug-in is starting, waiting 134 seconds for it to complete.

May 15, 2020 11:32:11.813 [0x7f3ec0ff9700] ERROR - Failed to begin transaction (../Library/MetadataItem.cpp:8430) (tries=3): Cannot begin transaction. database is locked

May 15, 2020 11:32:12.482 [0x7f3eb2ffd700] DEBUG - Request: [127.0.0.1:52614 (Loopback)] GET /identity (37 live) Signed-in

May 15, 2020 11:32:12.483 [0x7f3f35f44700] DEBUG - Completed: [127.0.0.1:52614] 200 GET /identity (37 live) 0ms 398 bytes (pipelined: 1)

May 15, 2020 11:32:12.591 [0x7f3f35f44700] DEBUG - Auth: authenticated user 1 as savantali

May 15, 2020 11:32:12.592 [0x7f3ea7fff700] DEBUG - Request: [192.168.2.1:37262 (WAN)] PUT /myplex/refreshReachability (37 live) TLS Signed-in Token (savantali)

May 15, 2020 11:32:12.592 [0x7f3ea7fff700] DEBUG - MyPlex: Requesting reachability check.

May 15, 2020 11:32:12.592 [0x7f3ea7fff700] DEBUG - HTTP requesting PUT https://plex.tv/api/servers/2214124c5488f8f19fc43c294c95e5b486b7611b/connectivity?X-Plex-Token=xxxxxxxxxxxxxxxxxxxx&asyncIdentifier=fecc36d9-8fb9-48b8-8d8e-9c93051df5cf

May 15, 2020 11:32:12.608 [0x7f3e977fe700] DEBUG - [com.plexapp.agents.none] Plug-in is starting, waiting 133 seconds for it to complete.

May 15, 2020 11:32:13.290 [0x7f3ea7fff700] DEBUG - HTTP 200 response from PUT https://plex.tv/api/servers/2214124c5488f8f19fc43c294c95e5b486b7611b/connectivity?X-Plex
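To see how often this happens across the full log folder rather than one excerpt, a rough tally like the following can help. It is only a sketch: the regular expression is inferred from the lines pasted above, and `SAMPLE_LINES` is a hand-picked subset of that excerpt, so the pattern may need adjusting for other Plex versions.

```python
import re
from collections import Counter

# Line shape inferred from the excerpt: "<date> [<thread>] <LEVEL> - <message>"
LINE_RE = re.compile(
    r"^(?P<ts>\w{3} \d{1,2}, \d{4} \d{2}:\d{2}:\d{2}\.\d{3}) "
    r"\[(?P<thread>0x[0-9a-f]+)\] (?P<level>[A-Z]+) - (?P<msg>.*)$"
)

SAMPLE_LINES = [
    "May 15, 2020 11:32:04.730 [0x7f3ec0ff9700] WARN - Waited one whole second for a busy database.",
    "May 15, 2020 11:32:05.741 [0x7f3ec0ff9700] ERROR - Failed to begin transaction "
    "(../Library/MetadataItem.cpp:8430) (tries=1): Cannot begin transaction. database is locked",
    "May 15, 2020 11:32:07.606 [0x7f3f0a7fc700] DEBUG - Sync: uploadStatus",
]


def summarize(lines):
    """Count WARN/ERROR lines mentioning a busy or locked database, per thread."""
    hits = Counter()
    for line in lines:
        match = LINE_RE.match(line.strip())
        if not match or match["level"] not in ("WARN", "ERROR"):
            continue
        msg = match["msg"].lower()
        if "busy database" in msg or "database is locked" in msg:
            hits[(match["thread"], match["level"])] += 1
    return hits


if __name__ == "__main__":
    for (thread, level), count in sorted(summarize(SAMPLE_LINES).items()):
        print(thread, level, count)
```

Feeding it the lines of a real log file (for example via `open(path).readlines()`) shows whether the lock errors cluster on one thread, as they appear to in the excerpt.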

I don’t think I have done anything out of the norm (besides running this in docker compared to on the host directly).

I haven’t limited resources at the docker level, so the container should have plenty of resources based on what the host has.

$ cat /proc/meminfo

MemTotal: 6088416 kB

MemFree: 139152 kB

MemAvailable: 3586160 kB

Buffers: 347064 kB

Cached: 1883892 kB

SwapCached: 118620 kB

Active: 2607060 kB

Inactive: 2748608 kB

Active(anon): 1149416 kB

Inactive(anon): 762928 kB

Active(file): 1457644 kB

Inactive(file): 1985680 kB

Unevictable: 18984 kB

Mlocked: 18984 kB

SwapTotal: 4194300 kB

SwapFree: 2986356 kB

Dirty: 15824 kB

Writeback: 0 kB

AnonPages: 3120252 kB

Mapped: 420472 kB

Shmem: 6096 kB

KReclaimable: 301484 kB

Slab: 451724 kB

SReclaimable: 301484 kB

SUnreclaim: 150240 kB

KernelStack: 14112 kB

PageTables: 32900 kB

NFS_Unstable: 0 kB

Bounce: 0 kB

WritebackTmp: 0 kB

CommitLimit: 7238508 kB

Committed_AS: 15203036 kB

VmallocTotal: 34359738367 kB

VmallocUsed: 30684 kB

VmallocChunk: 0 kB

Percpu: 3568 kB

HardwareCorrupted: 0 kB

AnonHugePages: 190464 kB

ShmemHugePages: 0 kB

ShmemPmdMapped: 0 kB

FileHugePages: 0 kB

FilePmdMapped: 0 kB

CmaTotal: 0 kB

CmaFree: 0 kB

HugePages_Total: 0

HugePages_Free: 0

HugePages_Rsvd: 0

HugePages_Surp: 0

Hugepagesize: 2048 kB

Hugetlb: 0 kB

DirectMap4k: 935808 kB

DirectMap2M: 5355520 kB

DirectMap1G: 2097152 kB
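A quick way to read the dump above is to boil it down to a few numbers. The sketch below is only an illustration: `SAMPLE` copies six fields from the output above, and the three summary values are my own choice of things to look at, not an official health metric. Whether committed memory above the commit limit matters depends on the kernel's overcommit settings, so none of this proves memory is the cause.

```python
SAMPLE = """\
MemTotal: 6088416 kB
MemAvailable: 3586160 kB
SwapTotal: 4194300 kB
SwapFree: 2986356 kB
CommitLimit: 7238508 kB
Committed_AS: 15203036 kB
"""


def parse_meminfo(text):
    """Return {field: value} for lines shaped like 'MemTotal: 6088416 kB'."""
    values = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and parts and parts[0].isdigit():
            values[name.strip()] = int(parts[0])
    return values


def pressure_summary(info):
    """Summarize available memory, swap in use, and commit level."""
    return {
        "available_pct": round(100 * info["MemAvailable"] / info["MemTotal"], 1),
        "swap_used_kb": info["SwapTotal"] - info["SwapFree"],
        "committed_over_limit": info["Committed_AS"] > info["CommitLimit"],
    }


if __name__ == "__main__":
    print(pressure_summary(parse_meminfo(SAMPLE)))
```

On a live system the same functions can be pointed at the text of `/proc/meminfo` instead of `SAMPLE`.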

4 of the 8 cores are available to the host.

$ cat /proc/cpuinfo

processor : 0

vendor_id : AuthenticAMD

cpu family : 23

model : 1

model name : AMD Ryzen 7 1700 Eight-Core Processor

stepping : 1

microcode : 0x8001138

cpu MHz : 2994.375

cache size : 512 KB

physical id : 0

siblings : 1

core id : 0

cpu cores : 1

apicid : 0

initial apicid : 0

fpu : yes

fpu_exception : yes

cpuid level : 13

wp : yes

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good nopl tsc_reliable nonstop_tsc cpuid extd_apicid pni pclmulqdq ssse3 fma cx16 sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm extapic cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw ssbd ibpb vmmcall fsgsbase bmi1 avx2 smep bmi2 rdseed adx smap clflushopt sha_ni xsaveopt xsavec xgetbv1 xsaves clzero arat overflow_recov succor

bugs : fxsave_leak sysret_ss_attrs null_seg spectre_v1 spectre_v2 spec_store_bypass

bogomips : 5988.75

TLB size : 2560 4K pages

clflush size : 64

cache_alignment : 64

address sizes : 45 bits physical, 48 bits virtual

power management:

=== START OF INFORMATION SECTION ===

Vendor: VMware

Product: Virtual disk

Revision: 2.0

Compliance: SPC-4

User Capacity: 214,748,364,800 bytes [214 GB]

Logical block size: 512 bytes

LU is fully provisioned

Rotation Rate: Solid State Device

Device type: disk

Local Time is: Fri May 15 14:19:43 2020 PDT

SMART support is: Unavailable - device lacks SMART capability.

Read Cache is: Unavailable

Writeback Cache is: Unavailable

You have Plex, in a Docker container in a VMware VM ?

I have plex in a docker container in a vmware, on an esxi host. Yes.

sudo docker stats plex --no-stream

CONTAINER ID NAME CPU % MEM USAGE / LIMIT MEM % NET I/O BLOCK I/O PIDS

da66b62c575d plex 23.13% 795.9MiB / 5.806GiB 13.39% 22.2MB / 267MB 172MB / 197MB 168

What is the guest OS host?

Base OS Host is listed in the first post:

Host: Ubuntu 20.04 LTS 4 Core CPU, 6GB RAM

Forgive me, you don’t need docker.

You already have a closed system (the ESXi host)

That be as it is,

Where is the VMDK ?

also, look here please

cpu cores : 1

you need more than one core

The reason for docker isn’t security/containment, but an overall effort to move most/all services from bloated VMs to containerized images, as well as a way to learn more about docker.

No limitation was added to the docker host to restrict it to one CPU core. I used the command and variables listed here (https://hub.docker.com/r/plexinc/pms-docker), adding a few volume mounts. If you look at the docker inspect file:

"CpuShares": 0,

"NanoCpus": 0,

"CpuPeriod": 0,

"CpuQuota": 0,

"CpuRealtimePeriod": 0,

"CpuRealtimeRuntime": 0,

"CpusetCpus": "",

"CpusetMems": "",

"CpuCount": 0,

"CpuPercent": 0,

According to docker documentation: By default, each container’s access to the host machine’s CPU cycles is unlimited.

Since I didn’t set a constraint, it should be seeing four cpu cores.
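To double-check the same thing without reading the inspect file by eye, something like this can filter the CPU fields down to the ones that are actually set. It is a sketch under assumptions: `cpu_limits` is a hypothetical helper, and `SAMPLE_HOST_CONFIG` holds only the values quoted above rather than the full inspect output.

```python
import json

# CPU-related HostConfig fields, as quoted from the inspect output above
CPU_KEYS = (
    "CpuShares", "NanoCpus", "CpuPeriod", "CpuQuota",
    "CpuRealtimePeriod", "CpuRealtimeRuntime",
    "CpusetCpus", "CpusetMems", "CpuCount", "CpuPercent",
)

SAMPLE_HOST_CONFIG = json.loads("""
{
  "CpuShares": 0, "NanoCpus": 0, "CpuPeriod": 0, "CpuQuota": 0,
  "CpuRealtimePeriod": 0, "CpuRealtimeRuntime": 0,
  "CpusetCpus": "", "CpusetMems": "", "CpuCount": 0, "CpuPercent": 0
}
""")


def cpu_limits(host_config):
    """Return only the CPU fields that are set to something other than 0 or ''."""
    return {key: host_config[key] for key in CPU_KEYS if host_config.get(key)}


if __name__ == "__main__":
    # An empty dict means no CPU constraint is configured on the container.
    print(cpu_limits(SAMPLE_HOST_CONFIG))
```

With the real file, the dictionary to pass in would be the `HostConfig` object of the first element of the JSON array that `docker inspect` prints.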

If I exec into the container, I can see that the container has access to all 4 cores:

@apollo:~$ sudo docker exec -it plex bash

root@apollo:/# lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 4

On-line CPU(s) list: 0-3

Thread(s) per core: 1

Core(s) per socket: 1

Socket(s): 4

NUMA node(s): 1

Vendor ID: AuthenticAMD

CPU family: 23

Model: 1

Model name: AMD Ryzen 7 1700 Eight-Core Processor

Stepping: 1

CPU MHz: 2994.375

BogoMIPS: 5988.75

Hypervisor vendor: VMware

Virtualization type: full

L1d cache: 32K

L1i cache: 64K

L2 cache: 512K

L3 cache: 16384K

NUMA node0 CPU(s): 0-3

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good nopl tsc_reliable nonstop_tsc cpuid extd_apicid pni pclmulqdq ssse3 fma cx16 sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm extapic cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw ssbd ibpb vmmcall fsgsbase bmi1 avx2 smep bmi2 rdseed adx smap clflushopt sha_ni xsaveopt xsavec xgetbv1 xsaves clzero arat overflow_recov succor

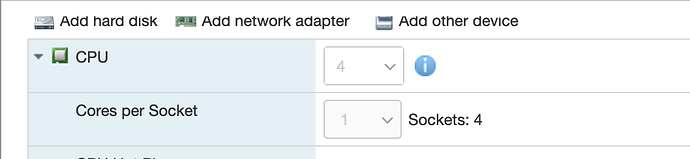

But looks like it sees it as individual sockets compared to a single socket and 4 cores.

Thanks for pointing that out to me, I wouldn’t have thought to look there. I will try to see what I can do to fix that.

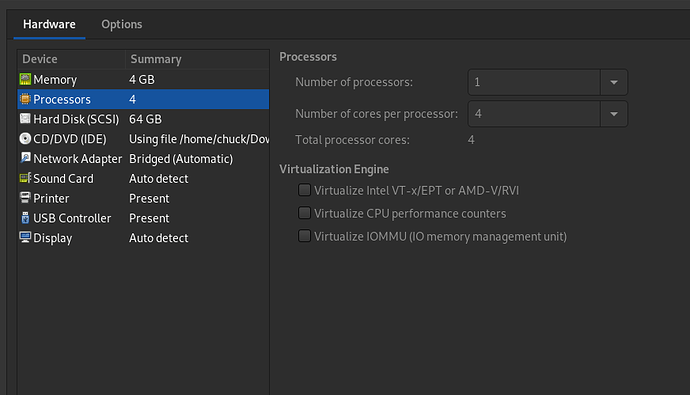

VMware will see 1 CPU with X cores.

To configure that VM you give it exactly that

1 CPU

4 cores

This VM has 4 cores assigned out of my possible 8

Yeah, I am familiar with that, but I wouldn’t have gone out of my way to configure it in such an out-of-the-box way.

This is a brand new server and guest VMs. The baremetal OS is esxi 7.0, so I am wondering if something has changed specifically on how that is interpreted when creating VMs now. I have corrected the CPUs for Plex. I will need to do the same to the others on the esxi server. Will check to see if that corrects the problem from here on out. I didn’t notice this issue on my last setup.

I can’t help with ESXi itself. Sorry, but that really is one for their forums.

No worries.

It was originally this:

I have already corrected the socket vs cpu per socket.

@apollo:~$ sudo lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

Address sizes: 45 bits physical, 48 bits virtual

CPU(s): 4

On-line CPU(s) list: 0-3

Thread(s) per core: 1

Core(s) per socket: 4

Socket(s): 1

NUMA node(s): 1

Vendor ID: AuthenticAMD

CPU family: 23

Model: 1

Model name: AMD Ryzen 7 1700 Eight-Core Processor

Stepping: 1

CPU MHz: 2994.375

BogoMIPS: 5988.75

Hypervisor vendor: VMware

Virtualization type: full

L1d cache: 128 KiB

L1i cache: 256 KiB

L2 cache: 2 MiB

L3 cache: 16 MiB

NUMA node0 CPU(s): 0-3

Vulnerability Itlb multihit: Not affected

Vulnerability L1tf: Not affected

Vulnerability Mds: Not affected

Vulnerability Meltdown: Not affected

Vulnerability Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl and seccomp

Vulnerability Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Vulnerability Spectre v2: Mitigation; Full AMD retpoline, IBPB conditional, STIBP disabled, RSB filling

Vulnerability Tsx async abort: Not affected

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good nopl tsc_reliable nonstop_tsc cpuid extd_apicid pni pclmulqdq ssse3 fma cx16 sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm cmp_legacy extapic cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw ssbd ibpb vmmcall fsgsbase bmi1 avx2 smep bmi2 rdseed adx smap clflushopt sha_ni xsaveopt xsavec xgetbv1 xsaves clzero arat overflow_recov succor

Just need to remember this for future guest VMs.

Will see if it continues to lock up from here going forward.

I have 12 VMs running concurrently on this NUC8.

All have 1 CPU / 4 Core config.

Zero issues.

Never mind, I see you were pulling it from /proc/cpuinfo. Even if it said 1 CPU core, it is still 4 CPUs on the host.

Remember, it’s what the guest OS has access to. How we get there doesn’t matter; what we end up with does, and that’s why I pointed out the single-core status.
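The arithmetic behind the two layouts in this thread can be checked with a few lines. This is just an illustrative sketch: `topology` is a hypothetical helper, and the two sample strings copy only the three relevant `lscpu` fields from the outputs posted above.

```python
BEFORE = """\
Thread(s) per core: 1
Core(s) per socket: 1
Socket(s): 4
"""

AFTER = """\
Thread(s) per core: 1
Core(s) per socket: 4
Socket(s): 1
"""


def topology(lscpu_text):
    """Parse sockets/cores/threads from lscpu text and derive logical CPUs."""
    fields = {}
    for line in lscpu_text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    sockets = int(fields["Socket(s)"])
    cores = int(fields["Core(s) per socket"])
    threads = int(fields["Thread(s) per core"])
    return {
        "sockets": sockets,
        "cores_per_socket": cores,
        "threads_per_core": threads,
        "logical_cpus": sockets * cores * threads,
    }


if __name__ == "__main__":
    print(topology(BEFORE)["logical_cpus"], topology(AFTER)["logical_cpus"])
```

Both layouts come out to the same number of logical CPUs, which is why `cpu cores : 1` in `/proc/cpuinfo` describes the per-socket layout rather than a shortage of CPUs in the guest.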

Yeah, doing that change did not result in any difference:

May 15, 2020 15:48:16.309 [0x7f8825ffb700] DEBUG - Request: [127.0.0.1:48404 (Loopback)] GET /:/plugins/com.plexapp.agents.lastfm/messaging/function/TWVzc2FnZUtpdDpHZXRBcnRpc3RFdmVudHNGcm9tU29uZ2tpY2tCeUlk/Y2VyZWFsMQoxCmxpc3QKMApyMAo_/Y2VyZWFsMQoxCmRpY3QKMQpzMzYKZDYyODVkOTUtOGVlMS00ZGUyLThjZWItZmY5MDA5OGFiMWQxczExCmFydGlzdF9tYmlkcjAK (20 live) GZIP Signed-in Token (savantali)

May 15, 2020 15:48:16.309 [0x7f8825ffb700] DEBUG - [com.plexapp.agents.lastfm] Sending command over HTTP (GET): /:/plugins/com.plexapp.agents.lastfm/messaging/function/TWVzc2FnZUtpdDpHZXRBcnRpc3RFdmVudHNGcm9tU29uZ2tpY2tCeUlk/Y2VyZWFsMQoxCmxpc3QKMApyMAo_/Y2VyZWFsMQoxCmRpY3QKMQpzMzYKZDYyODVkOTUtOGVlMS00ZGUyLThjZWItZmY5MDA5OGFiMWQxczExCmFydGlzdF9tYmlkcjAK

May 15, 2020 15:48:16.309 [0x7f8825ffb700] DEBUG - HTTP requesting GET http://127.0.0.1:40553/:/plugins/com.plexapp.agents.lastfm/messaging/function/TWVzc2FnZUtpdDpHZXRBcnRpc3RFdmVudHNGcm9tU29uZ2tpY2tCeUlk/Y2VyZWFsMQoxCmxpc3QKMApyMAo_/Y2VyZWFsMQoxCmRpY3QKMQpzMzYKZDYyODVkOTUtOGVlMS00ZGUyLThjZWItZmY5MDA5OGFiMWQxczExCmFydGlzdF9tYmlkcjAK

May 15, 2020 15:48:16.313 [0x7f8797fef700] DEBUG - Request: [127.0.0.1:48410 (Loopback)] GET /services/songkick?uri=%2Fartists%2Fmbid%3Ad6285d95-8ee1-4de2-8ceb-ff90098ab1d1%2Fcalendar.json (21 live) GZIP Signed-in Token (savantali)

May 15, 2020 15:48:16.360 [0x7f88277fe700] WARN - Waited one whole second for a busy database.

May 15, 2020 15:48:16.369 [0x7f87a57fa700] DEBUG - [Notify] Now watching "/data/rclone/gmusic/Fiona Apple"

May 15, 2020 15:48:16.499 [0x7f8827fff700] DEBUG - Completed: [127.0.0.1:48410] 200 GET /services/songkick?uri=%2Fartists%2Fmbid%3Ad6285d95-8ee1-4de2-8ceb-ff90098ab1d1%2Fcalendar.json (21 live) GZIP 186ms 4584 bytes

May 15, 2020 15:48:16.550 [0x7f879e7fc700] DEBUG - Looking for path match for [/data/rclone/gmusic/X/1980 - X - Los Angeles/14 - X - Los Angeles [Dangerhouse Version].mp3]

May 15, 2020 15:48:16.550 [0x7f88267fc700] DEBUG - Done with metadata update for 44709

--

May 15, 2020 15:48:17.626 [0x7f87957ea700] DEBUG - Setting container serialization range to [0, 15] (total=-1)

May 15, 2020 15:48:17.626 [0x7f882cec5700] DEBUG - Completed: [192.168.2.1:51700] 200 GET /hubs/home/onDeck?includeExternalMedia=1 (19 live) TLS GZIP Page 0-15 332ms 1851 bytes (pipelined: 5)

May 15, 2020 15:48:17.742 [0x7f87a57fa700] DEBUG - [Notify] Now watching "/data/rclone/gmusic/Fire! Orchestra"

May 15, 2020 15:48:17.910 [0x7f879e7fc700] WARN - Held transaction for too long (../Library/MetadataItem.cpp:3590): 1.390000 seconds

May 15, 2020 15:48:17.910 [0x7f879e7fc700] DEBUG - Native Scanner: Executed Add to Database stage in 30.72 sec.

May 15, 2020 15:48:17.919 [0x7f87d77fe700] DEBUG - Request: [127.0.0.1:48468 (Loopback)] GET /:/metadata/notify/changeItemState?librarySectionID=3&metadataItemID=45398&metadataType=10&state=-1&mediaState=analyzing (20 live) GZIP Signed-in Token (savantali)

May 15, 2020 15:48:17.920 [0x7f882cec5700] DEBUG - Completed: [127.0.0.1:48468] 200 GET /:/metadata/notify/changeItemState?librarySectionID=3&metadataItemID=45398&metadataType=10&state=-1&mediaState=analyzing (20 live) GZIP 0ms 166 bytes

May 15, 2020 15:48:17.920 [0x7f88277fe700] DEBUG - Loaded metadata for Young Thug (ID 44734) in 1530.000000 ms

May 15, 2020 15:48:17.920 [0x7f88277fe700] DEBUG - There was a change for metadata item 44734 (Young Thug), saving.

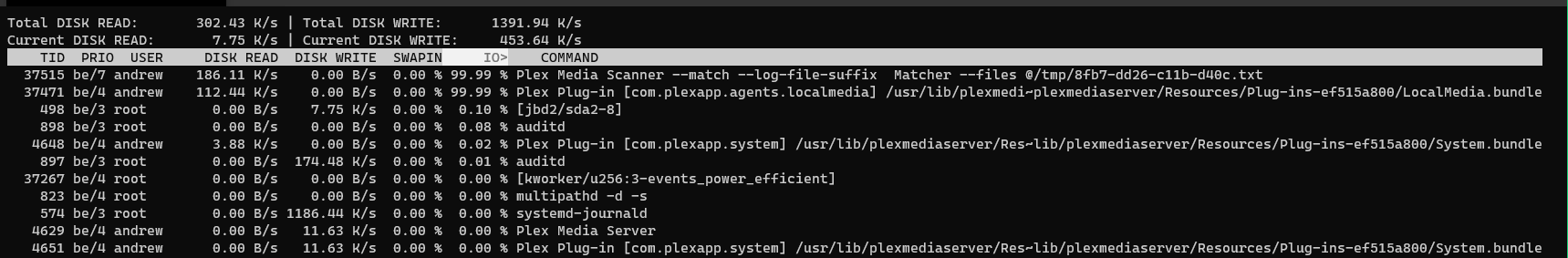

Disk IO shouldn’t be a problem here, but it seems like plex is getting stuck at 99% sometimes:


After looking around, it seems like this is very similar to my issue: Plex server stop and Sqlite3: Sleeping for 200ms to retry busy DB is reported in the Log file

I am running everything off of an NVME ssd, ECC RAM, and the CPU as shown. Seems like this may be an optimization problem with the application itself.
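One way to test the storage claim from inside the VM or container is to time small synchronous writes, since a SQLite commit normally waits for the data to be flushed to disk. The sketch below is an assumption-laden probe, not a benchmark: `fsync_latencies`, the 4 KiB payload, and the 50 iterations are arbitrary choices of mine, and the directory to probe has to be supplied by whoever runs it (it defaults to the system temp directory).

```python
import os
import statistics
import sys
import tempfile
import time


def fsync_latencies(directory, writes=50, size=4096):
    """Time `writes` small write+fsync cycles in `directory`; return seconds each."""
    payload = b"\0" * size
    samples = []
    fd, path = tempfile.mkstemp(dir=directory)  # scratch file, removed afterwards
    try:
        for _ in range(writes):
            start = time.perf_counter()
            os.write(fd, payload)
            os.fsync(fd)  # wait until the write reaches stable storage
            samples.append(time.perf_counter() - start)
    finally:
        os.close(fd)
        os.remove(path)
    return samples


if __name__ == "__main__":
    target = sys.argv[1] if len(sys.argv) > 1 else tempfile.gettempdir()
    results = fsync_latencies(target)
    print(f"median {statistics.median(results) * 1000:.2f} ms, "
          f"max {max(results) * 1000:.2f} ms")
```

Running it against the directory that holds the Plex config, both while Plex is idle and while a scan is in progress, gives a rough idea of whether flush latency spikes when the database goes busy.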

Look at the I/O. It’s saturated.

Where is the VMDK for this? Over the LAN?