For about 2 years now I’ve been running my PMS with media data on WD golds in Raid 5, metadata on a dedicated Samsung Evo 500gb SSD, and running all transcoding to a Startech M.2 Raid 0 card loaded with 2 super cheap 500gb M.2 SSDs (don’t even remember the brand). I had tried going the whole RAMdisk route, but I found it was just more of a headache than it was worth even with an 8GB RAMdisk (out of 32GB ddr4, and at most 1 transcode at a time). I bought the cheap M.2 SSDs for something like $22/piece, so if they die… So be it.

I’ve been having increasing issues with PMS clients being able to play my media, as if something is degrading. But I run checks daily in SnapRAID and StableBit Scanner and everything looks great. Samsung Magician is also reporting everything is good. My metadata TBW is only 8.4TB after 2 years of service, which is estimated at 2% life. By contrast, I have an identical drive for personal files that’s the same age and at 2.4TB… So not bad in my opinion. The problem comes in with these cheap M.2 drives, because there’s no way to get drive health or SMART data through the Startech RAID card. I have no idea if these things are dying or not. I don’t even know how to check it since I don’t have another available M.2 slot to use on my board.
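As a rough sanity check on those wear numbers, here is a minimal Python sketch that extrapolates drive life linearly from the figures above (8.4TB written, 2% of life, 2 years in service). It assumes the 2% figure means life already used and that the write rate stays constant. The function names are made up for illustration, and this is not how Samsung Magician computes its estimate.

```python
def implied_endurance_tb(written_tb: float, fraction_used: float) -> float:
    """Total write endurance implied by 'written so far' and 'fraction of life used'."""
    if not 0 < fraction_used <= 1:
        raise ValueError("fraction_used must be in (0, 1]")
    return written_tb / fraction_used


def remaining_years(fraction_used: float, years_in_service: float) -> float:
    """Years left if the drive keeps wearing at the same average rate (assumption)."""
    if not 0 < fraction_used <= 1:
        raise ValueError("fraction_used must be in (0, 1]")
    return years_in_service * (1.0 - fraction_used) / fraction_used


# Figures from the post: 8.4 TB written, 2% of life, 2 years of service.
print(implied_endurance_tb(8.4, 0.02))  # roughly 420 TB
print(remaining_years(0.02, 2.0))       # roughly 98 years
```

At that rate the metadata drive would outlast the rest of the build. A transcode drive would likely see a much heavier write load, so the same arithmetic would give a shorter figure for it.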

So I was thinking of just scrapping them and getting a low(ish) capacity MLC or possibly SLC Samsung SSD for transcoding, just so I can get all the drive health data and be confident that I’m not running garbage hardware. Just something that’s still economical. I know it’s “bad” to run SSDs for a transcoding drive due to their finite life, but I’m just wondering how long you could realistically expect to get out of a setup like that. If the price is right, I don’t even mind treating these drives as a “maintenance” item that gets replaced. I can just get a hot swap front bay to make it easy. Unless running a high rpm spinning disk for this task is a feasible option, I don’t see a way around this.

Transcoding temp on ssd is pointless.

Unless you use disks that are the speed of floppy disks, any old 500 gig hdd will work.

the bottleneck is not the transcoded temp, but either your cpu, or your upload bw.

RAM disk is not for speed, but to avoid write wear on ssd system/plex data volumes.

And you should only use RAM disk of 16+ gig (or much more for many streams or dvr recording).

The simple way to see if your M.2 drives are failing or slowing from write wear is to point the transcoding temp somewhere else.

Yeah i don’t know about all that.

Will transcoding to a HDD work? Of course. Is it going to have the same real world performance as transcoding to an SSD? Not a chance.

Even the highest rated consumer HDDs don’t come near rated specs in real world tests. My WD Gold Helium HDDs rank at the top of UserBenchmark and they don’t even crack 200MB/s sequential read/write. Those were $320/piece pre-COVID, so there’s not a chance I’d put something that high end in for a transcoding HDD. Reds and Blues barely crack 100MB/s, if even that. That’s not even enough to saturate a 1 Gbit LAN, and I’m setting up 2.5Gbit LAN throughout my house.
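To put those drive speeds and link speeds in the same units, here is a quick conversion sketch in Python. It uses decimal units and ignores protocol overhead, so real-world network throughput would be somewhat lower. The helper names are hypothetical.

```python
def mbytes_to_mbits(mb_per_s: float) -> float:
    """Convert megabytes per second to megabits per second (8 bits per byte)."""
    return mb_per_s * 8


def link_capacity_mbytes(gbit_per_s: float) -> float:
    """Theoretical ceiling of a network link in megabytes per second."""
    return gbit_per_s * 1000 / 8


print(mbytes_to_mbits(100))       # 800 Mbit/s for a 100 MB/s drive
print(mbytes_to_mbits(200))       # 1600 Mbit/s for a 200 MB/s drive
print(link_capacity_mbytes(1))    # 125.0 MB/s on a 1 Gbit link
print(link_capacity_mbytes(2.5))  # 312.5 MB/s on a 2.5 Gbit link
```

So a 100MB/s drive falls a little short of a 1 Gbit link’s 125MB/s ceiling, while a drive near 200MB/s exceeds it but stays well under the 312.5MB/s of a 2.5 Gbit link.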

Cache is way too small to handle a large transcoding task, and then spin up time becomes an issue real quick. Reds, being a low power HDD, can take up to 6s to reach peak RPM. The more discs, the longer it’ll take. The larger the discs, the longer it’ll take. That’s why I was thinking small capacity 2.5" HDD if I did do a HDD transcoding drive. I know a few seconds doesn’t sound like much, but it gets old fast. I ran transcoding off a mechanical disc when I first set up my PMS, and I dealt with all these issues. However that was a larger capacity Blue that was being shared.

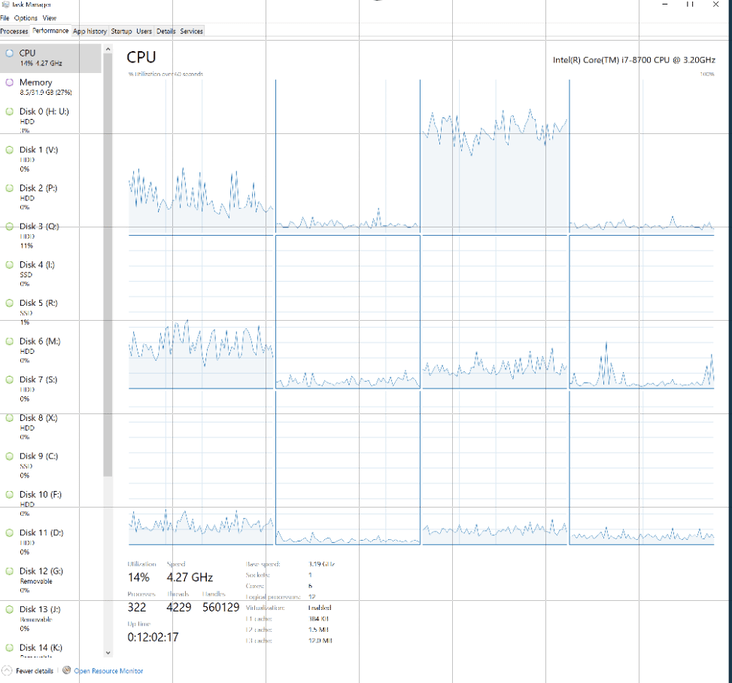

CPU is definitely not the bottleneck because (a) I transcode on my 2060KO, and (b) I run an i7-8700 anyway. Either of those two could easily handle the transcoding tasks without breaking a sweat, and there’s tons of room on the PCIE lanes for that kind of data.

I’ve never heard anyone suggest running a 16GB RAMdisk before. Even 8GB was considered a lot when I set mine up, especially for my use case of single transcode tasks. I’m bottlenecked at 2 transcodes on the 2060KO anyway (I know it can be unlocked, but I have no need). I was having issues with maintenance of the RAMdisk. It isn’t as simple as setting up an SSD to handle the task, and I just didn’t have the time to deal with the BS with it for essentially no gain. I didn’t set it up for speed. I set it up to not burn through an SSD quickly. But then I had the brilliant idea to just use throwaway SSDs, and I chose RAID 0 to extend their life as well. I just can’t tell WTF is going on with those drives now is all.

I hadn’t actually thought about just changing the transcoding directory to another existing disc. I might have enough room on my other Samsung EVO to do this, so I’ll give that a try and see if that fixes the issue. And if it does, I guess I probably burned through the crappy M.2 drives already. Though 2 years isn’t terrible for $44…

size of the ram disk affects how much plex can prebuffer the transcode before it goes out to clients. 8 gig of temp ram/disk space is not a lot to share between several streams (or live tv/dvr recording buffers).

I did not mean to imply that your specific cpu/system was cpu bottlenecked, only that:

- if the cpu/gpu can’t keep up with the base transcode, then obviously disk speed is irrelevant.
- as long as the transcode temp disk is faster than your upload speed, it does not matter, the bottleneck is how fast the data can be sent to your client(s).
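For scale, here is a rough Python sketch of that comparison using numbers mentioned in this thread: a transcoded stream of roughly 20Mbps and a hard drive managing about 100MB/s. It only counts steady-state sequential throughput and ignores seek overhead, reads of the source file, and the faster-than-realtime burst while the buffer fills, so treat the result as an upper bound. The function name is made up.

```python
def max_streams(disk_mb_per_s: float, stream_mbit_per_s: float) -> int:
    """Upper bound on simultaneous streams before disk throughput is the limit."""
    if disk_mb_per_s <= 0 or stream_mbit_per_s <= 0:
        raise ValueError("both rates must be positive")
    stream_mb_per_s = stream_mbit_per_s / 8  # megabits -> megabytes
    return int(disk_mb_per_s // stream_mb_per_s)


# A 20 Mbit/s stream is only 2.5 MB/s, so a 100 MB/s disk has plenty of headroom.
print(max_streams(100, 20))  # 40
```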

disk sleep/spin up would only matter at the start of a stream, on an otherwise sleeping/idle system.

if you are streaming 6+ high bitrate uploads, on a super fast fiber link, then maybe… maybe, will transcoder disk speed have any relevance.

and if you are doing that kind of heavy serving, well then you are probably not considered ‘typical’.

in any case, a cheap $20 64 gig generic sata ssd would suffice, and it would allow for your smart monitoring.

I don’t know what you’re talking about with live TV & DVR (is that a feature in Plex now?). I only use Plex for my personal library, and 99.9% of the time it’s 1 transcode going at a time. I have an extensive 4k library and several clients that cannot play native 4k, so transcoding is a daily occurrence. It’s mostly coming from my wife who works from home and watches movies all day while working.

The transcoding can get data intensive for 4k, but it’s still just 1 transcoding stream at a time. Some people seem to be under the impression that it’s bad to transcode, but it shouldn’t be at all. I think it’s bad to waste valuable space on expensive HDDs for storing multiple versions of the same movies, which seems to often be the suggested alternative with Plex. I’ve had to resort to creating “optimized versions” of a couple movies in my library because they won’t play at all now on certain clients. I don’t want to keep having to do that, so I need to figure this out. I’m going to try switching the transcoding directory and see if that changes anything.

Then you can use any old hard drive for the transcoder temp folder and won’t notice that much difference. (a non-“shingled” one, that is)

Particularly when you slap a bit more RAM into the server, which will be used automatically by the operating system to cache access to hard drives.

And of course you don’t want to spin down this hard drive when idle.

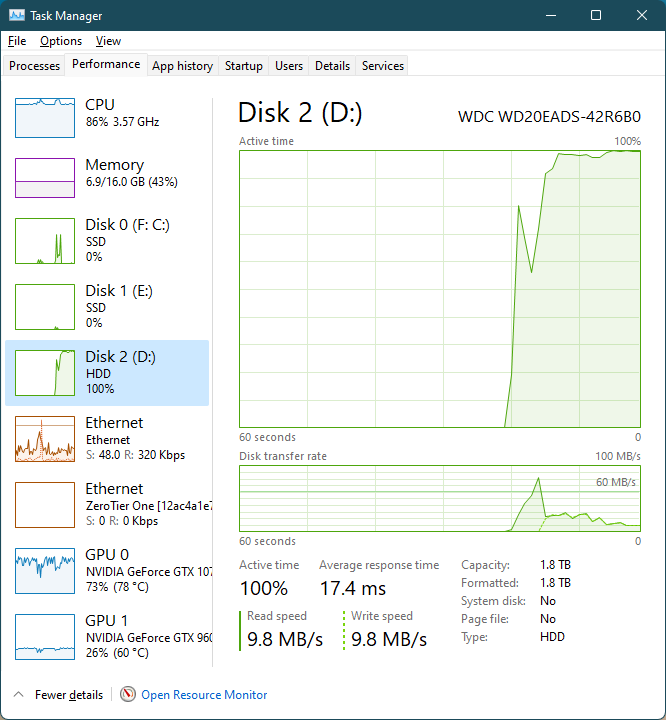

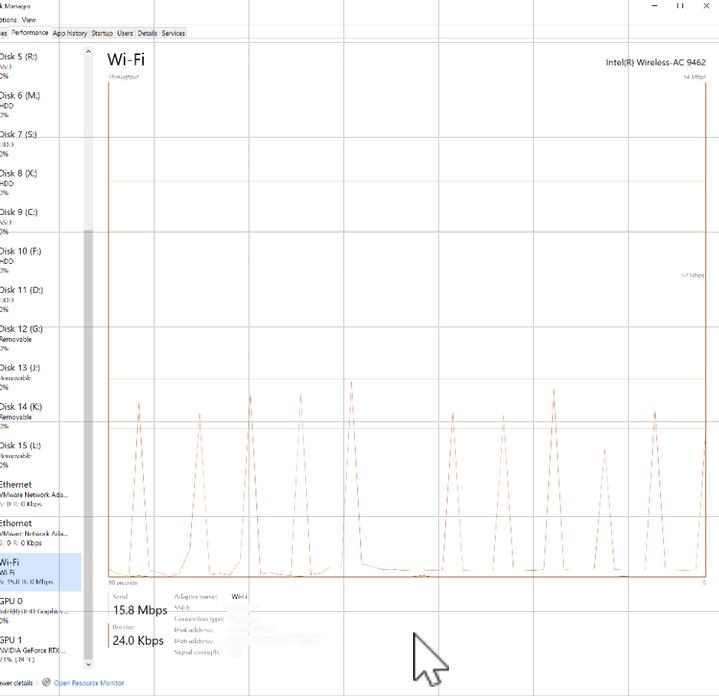

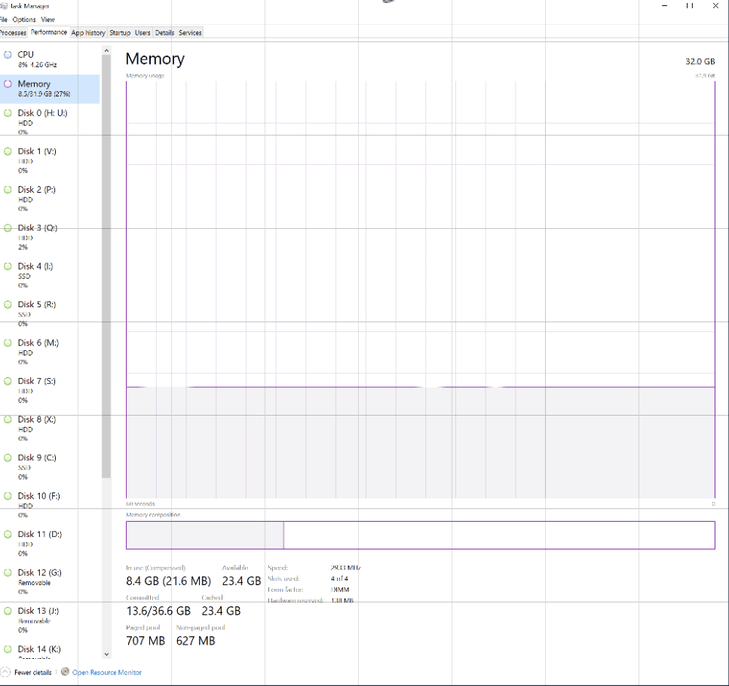

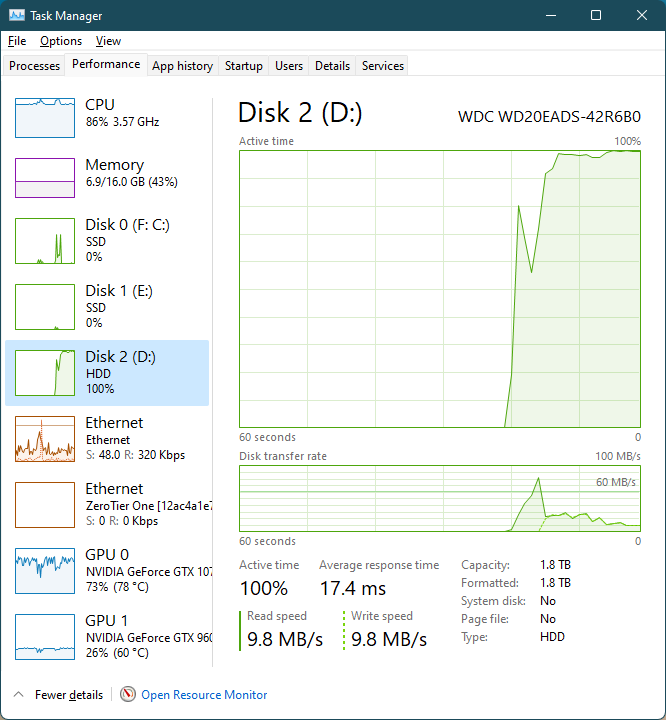

since this topic is tagged server-windows, one simple thing you can do is look at your windows task manager or resource monitor, which will show each disk’s read/write load.

if for some reason you see drives at 100% constantly, then there could be some kind of disk bottleneck.

otherwise, again, transcoding temp disk speed normally has ZERO influence on any other problems you may be experiencing.

and if you are having some kind of problems, then maybe post about that, with logs, screenshots of your pms dashboard, and specific hardware/software details about the server, the client(s) having problem(s), and the network setup.

example of windows task manager after starting a defrag (note this is not my pms server, i am using linux for that).

at the bottom of the task manager, you can click resource monitor to get even more details about system loads.

similarly, on linux, you can use htop or other similar tools to see disk io bottlenecks, like in this example below, the “D” indicates the process is delayed/waiting on disk io.

A bit more RAM? You’re not seriously suggesting 32gb is insufficient ram for a Plex server are you?

That depends on whether you are also using software RAID/“storage spaces” on this server or not.

I’ve found that posting about PMS issues and troubleshooting here is pretty much useless. Posts often go unanswered. And the ones that get answers get useless, unrelated answers or empty promises of a fix from Plex. I pretty much gave up trying to get any real answers on this board a long time ago. This post is much more basic, as it was just asking for others’ experiences.

I’m using snapraid and drivepool, and 32gb is way more than enough

I would also suggest changing task manager cpu to show logical cores. Just because windows may not show 100% overall cpu usage does not mean that there isn’t an overloaded/100% core.

similarly, if you had ram utilization issues, task manager would show how much was in use.

I don’t know how many different ways to say I don’t have any issues with ram, cpu, gpu, etc. This system is way over-spec’d for a PMS. I originally built the machine to be a surveillance server, then it fell into dual use when I discovered Plex. Then when the pandemic hit it also became my workstation and I was allocating 2 cores and 8gb ram to a VMWare VM for work. And with all three of those running simultaneously, I still had no issues on this exact hardware.

It wasn’t until more recently that I began having these Plex media playback issues. I’ve had a number of Plex issues in the past, so I chalked it up to being just another Plex bug. But the behavior has had me wondering if it is drive failure, and that’s where I’m at now.

My company has since issued me a laptop, so I no longer use the VM. And my wife and I have moved since I originally built this, and I haven’t had a chance to reinstall my security cameras yet, so that system has been offline for about 9 months now as well. The server is essentially entirely dedicated to Plex at the moment. And just to be clear, I’m using the term “server” very loosely here (if that wasn’t obvious enough by the cpu/gpu). This is just a consumer grade ATX build, not rack-mounted or headless.

Here are some screenshots of resource utilization anyway. As you can see, I’m not even close to maxing out cpu or ram. Gpu is pegged for the first 300s per my settings in Plex. This is transcoding a single 4k hevc movie to 720p. Sorry the screenshots are terrible; I did it on my phone via remote desktop client. CPU isn’t being used for Plex transcoding at all. Ram usage is low (I think I even have a browser with like 30 tabs open now too). NIC spikes up to 20Mbps. Nothing to suggest a hardware bottleneck that I can see.

And before this even gets brought up, yes I am (temporarily) on Wi-Fi because I recently moved and haven’t built out the LAN in my new house yet. Still trying to work out the best location for cable drops. The problems began well before we moved, while the server was hard-wired. I already have my primary AX86U set up (going to do AiMesh with wired backhaul + 2.5Gbit switches), and I get good enough speeds on this board’s AC NIC to stream 4k movies to my clients for the time being. Though I have an AX NIC coming in the mail anyway.

My PMS is on Ubuntu and I have the transcoder directory set to /dev/shm, which is Ubuntu’s automagic size-changing RAM disk. Leaving all other settings on automatic I found that a Quick Sync transcode of a 4k HDR file consumed about 700 MB of RAM disk space.

As my 8 GB RAM server has about 1.5 GB in use at baseline, I found I could do several transcodes with no problem. Since I never have more than 2-3 streams going this has worked out well for me.
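That headroom estimate can be sketched in a few lines of Python. The 700 MB per transcode and the 8 GB / 1.5 GB figures come from the post above; the helper names are hypothetical. The `shutil.disk_usage` call simply reports what the filesystem says is free at a given path. On Linux, /dev/shm is a tmpfs that is capped at half of RAM by default, so the real limit may be lower than total free memory.

```python
import shutil


def free_mb(path: str) -> int:
    """Free space at `path` in decimal megabytes, as reported by the filesystem."""
    return shutil.disk_usage(path).free // (1000 * 1000)


def streams_that_fit(free_space_mb: int, per_stream_mb: int) -> int:
    """How many transcode buffers of a given size fit in the free space."""
    if per_stream_mb <= 0:
        raise ValueError("per_stream_mb must be positive")
    return max(0, free_space_mb // per_stream_mb)


# 8 GB of RAM minus about 1.5 GB baseline, at about 700 MB per transcode.
print(streams_that_fit(8000 - 1500, 700))  # 9
# With the default tmpfs cap of half of RAM (4 GB here, an assumption).
print(streams_that_fit(4000, 700))         # 5
```

To check a live system, something like `streams_that_fit(free_mb("/dev/shm"), 700)` would use the actual free space instead of the hand-entered figures.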

That sounds about right for what I was experiencing. I think I was pushing 1GB when I was watching it live before, but I wasn’t even close to hitting 8GB.

BTW, switching the transcode directory to my Samsung Evo SSD didn’t help the issue whatsoever. I also ran a series of tests in CrystalDiskMark on the M.2 RAID 0 SSDs I had been using for transcoding, and everything seems to look fine if the results are accurate. It’s not as fast as the Evo, but it’s close. It is considerably faster than my WD Purple, which is the only spinning disk I had for comparison that wasn’t currently in use. Sounds like these M.2s are fine after all 🤷 But now I’m back to not knowing why I’m having media playback degradation.

What exactly is the issue you see?


A RAM disk is truly the best way to go if you have enough RAM. I personally use a 12GB RAM disk, with a 900 sec buffer. There is no wear and tear you have to worry about. With that being said, based on your particular scenario, a single drive will saturate your network connection before it hits its maximum sequential read or write throughput. A single HDD (let’s say the WD Gold) can easily read/write more than 100MB/s, which would saturate a 1Gb link. If you aren’t seeing those speeds, then there is a bottleneck somewhere. But since you are just transcoding, you don’t even need those speeds. What are you encountering that’s pointing to disk related issues?
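As a rough check on how much space a time-based buffer needs, the size is approximately bitrate times duration. Here is a small Python sketch, assuming a constant output bitrate (real transcodes vary) and using the 20Mbps peak reported earlier in the thread purely as an example. `buffer_gb` is a made-up helper.

```python
def buffer_gb(bitrate_mbit_per_s: float, seconds: float) -> float:
    """Approximate buffer size in decimal GB for a constant-bitrate stream."""
    if bitrate_mbit_per_s < 0 or seconds < 0:
        raise ValueError("inputs must be non-negative")
    megabytes = bitrate_mbit_per_s * seconds / 8  # megabits -> megabytes
    return megabytes / 1000


print(buffer_gb(20, 900))  # 2.25 GB for a 900 s buffer
print(buffer_gb(20, 300))  # 0.75 GB for a 300 s buffer
```

The 300s result is in the same range as the 700MB to 1GB per transcode reported above, and at 900s a 12GB RAM disk would have room for around five such streams under these assumptions.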


I have to agree with @BanzaiInstitute here… You haven’t actually stated what the problem is.

You have just said “media playback degradation” or similar.

Perhaps you could give a bit more detail in what your clients are actually experiencing, and whether or not any of them are Direct Playing or Transcoding.

In regards to your transcode area, as a number of people have said here, using RAM for your transcode area is by far the most efficient and non destructive way to do it.

I was using a 16GB RAM Disk for a few years and never had any problems, although I did get close to filling it a few times with 3 transcode streams going, all of which were quite long movies.

I’ve recently put more RAM in my server and therefore now run a 24GB RAM disk.

But all of that is irrelevant until you tell us what you are actually experiencing, and which clients you are using, and which media is problematic.

Is it all media?

Is it just some with specific formats / subtitles etc?

Is it some specific clients?

What clients are they?

Is this with remote clients or local, or both?

Only once we know more about the client end, can we even begin to consider whether or not this is a server side problem.
