Trying to wrap my head around 4K and 2160p and all that jazz.

Does Plex somehow “know” the native resolution of my video device, so that it can transcode a video stream to a lower resolution as needed?

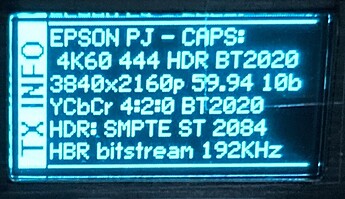

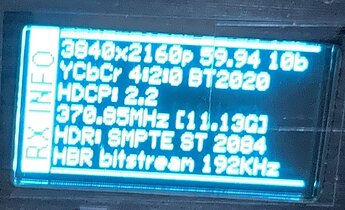

I guess this is made more complex by the myriad video resolution standards. For a specific example, I’m running both PMS and the Plex player on an NVIDIA Shield Pro, which is plugged into an Epson 5050UB projector via HDMI. The Epson is a “4K PRO-UHD” projector. As far as I know, while its marketing propaganda claims it is 4K, this projector really just uses a video processing chip that is inherently 1920 x 1080, and fires each pixel twice, shifting the second fire slightly, so that while it’s not native 4K, it kinda fakes it out. I presume this fakery means that the projector ultimately hardware-downsamples any content that is in a resolution higher than 1920 x 1080 down to its native 1920 x 1080 before displaying it.
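To put rough numbers on what I mean, here is a quick back-of-the-envelope sketch in Python. It assumes my understanding above is right (a 1920 x 1080 panel fired twice per frame) and takes "4K" to mean 3840 x 2160 UHD; the names are just ones I made up for illustration.

```python
# Rough pixel-count comparison. The 2x figure ASSUMES the projector fires
# a 1920 x 1080 panel twice per frame, as I described above; I have not
# verified this against Epson's specs.
NATIVE_1080P = 1920 * 1080        # pixels on the panel itself
PIXEL_SHIFTED = NATIVE_1080P * 2  # two shifted passes per frame (assumption)
NATIVE_UHD = 3840 * 2160          # "4K" UHD, i.e. 2160p


def fraction_of_uhd(pixels: int) -> float:
    """Return the given pixel count as a fraction of a native UHD frame."""
    return pixels / NATIVE_UHD


if __name__ == "__main__":
    for label, pixels in [
        ("native 1080p", NATIVE_1080P),
        ("pixel-shifted 1080p", PIXEL_SHIFTED),
        ("native UHD", NATIVE_UHD),
    ]:
        print(f"{label}: {pixels:,} pixels ({fraction_of_uhd(pixels):.0%} of UHD)")
```

So if my assumption holds, the projector can address at most about half the pixels in a true 2160p frame, which is why I suspect some of the stream is being thrown away.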

How does Plex optimize the video stream in this case? If I have content that is stored in native 4K resolution, will Plex magically know to transcode it down to 1920 x 1080 before sending it to my projector? Or does it leave the video content untouched, sending it unmodified to the video device and letting the device deal with it? The latter seems wasteful if the content is much higher resolution than the device can handle: all those bits eat up Wi-Fi and computational bandwidth, only to be discarded in the end.