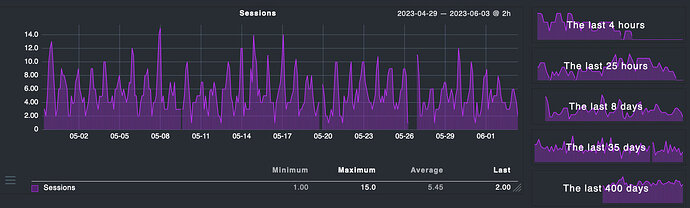

Summary: I started seeing this database busy error a couple of weeks ago. A hard drive on that system crashed, but the db was stored on a secondary drive so it wasn’t affected; I just lost the thumbnail stores. Since getting the system back up it’s had occasional hangs. I started taking note of when they would hit and I’ve narrowed it down to the library scans.

If I start the server up and let it run with library scans disabled, I tend to have no issues. I have seen as many as 16 people connected at once without problems. Within five minutes of starting a full library scan, these busy database messages start to appear and the web interface hangs, as do client connections.

OS: RHEL 9.2

RAM: 16G

HD: 1.5T free (software RAID1, ext4 | Samsung SSD 870 EVO)

Server Version#: 1.32.3.7089

DB Cache size: 2048

plex plex 3.6G May 31 11:12 com.plexapp.plugins.library.blobs.db

plex plex 1.2G May 31 11:24 com.plexapp.plugins.library.db

Plex Media Server Logs_2023-05-31_11-19-02.zip (4.3 MB)

I’ve run an integrity check and a vacuum on both databases. No errors turned up. I went ahead and did a recovery and rebuild as well, just to make sure that everything was clean. I’ve also tried various values for the db cache size, but it doesn’t seem to make a difference. Any ideas, @ChuckPa?
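For anyone wanting to repeat the integrity check and vacuum steps, here is a rough sketch of what they amount to on a generic SQLite file, using Python's standard `sqlite3` module. The helper name `check_and_vacuum` is made up for illustration, and this is not necessarily how the checks above were run. Plex ships its own SQLite build, so the stock module may not handle the real Plex databases correctly; treat this as an illustration of the two operations only, and try it on a copy with the server stopped.

```python
import sqlite3


def check_and_vacuum(db_path):
    """Run PRAGMA integrity_check on db_path and VACUUM it if the check passes.

    Returns the list of messages from the integrity check; a healthy
    database yields exactly ["ok"]. (Hypothetical helper, not part of Plex.)
    """
    # isolation_level=None puts the connection in autocommit mode;
    # VACUUM cannot run inside an open transaction.
    conn = sqlite3.connect(db_path, isolation_level=None)
    try:
        rows = conn.execute("PRAGMA integrity_check").fetchall()
        messages = [row[0] for row in rows]
        # Only rebuild the file when the check reports no problems.
        if messages == ["ok"]:
            conn.execute("VACUUM")
        return messages
    finally:
        conn.close()


# Example call (path is an assumption; point it at a copy of the database):
# print(check_and_vacuum("com.plexapp.plugins.library.db"))
```

The function skips the vacuum when the check reports anything other than `ok`, since rebuilding a file that already shows corruption is unlikely to help.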