So, if I read those right, you can’t get the data you want from it.

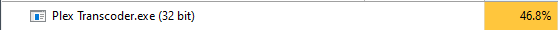

First box checked, second box unchecked = CPU is encoding? (This is doubtful; considering the percentage I got, it seems it’s actually just decoding):

First box unchecked, second box checked = CPU is encoding AND decoding (this seems accurate):

Both boxes unchecked = CPU is encoding AND decoding (again, this seems accurate):

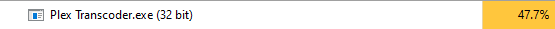

Both boxes checked = CPU is doing nothing, GPU is encoding and decoding (this also seems accurate):

Pretty sure their checkboxes are not labeled properly, or we can’t actually do what we are trying to do. I stand by my statement: decoding takes next to no power at all, as evidenced by simply playing back the file in any given media player. Hell, even going x264 -> x265 and x265 -> x265 in HandBrake gives nearly identical encoding frames per second:

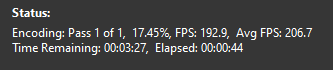

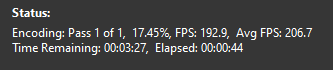

x264 -> x265 1080p:

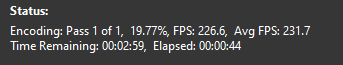

Same File

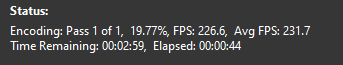

x265 -> x265 1080p:

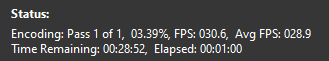

Granted, this is using NVENC, but if there were a significant difference between decoding one or the other, you’d see an FPS drop. You don’t, because decoding the video in either scenario is relatively easy; encoding to either x264 or x265 is the difficult part. Decoding 265 is definitely easier than encoding 264, all day long.

The one place I can specifically point to a decoding difference is VC-1 vs AVC. Whenever the source is VC-1, I can’t seem to transcode at more than 150-200 fps, but when it’s AVC I can transcode at 200-250 fps. That’s directly because of the decoding difference between them, or at least that’s what it seems to be from everything I’ve examined.
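To put rough numbers on the VC-1 vs AVC gap, here is a quick back-of-the-envelope sketch. It assumes the midpoints of the ranges I quoted (175 fps and 225 fps), that the encode cost per frame is the same in both cases, and that decode and encode time simply add up per frame. None of that is measured; it’s just arithmetic on my observed ranges.

```python
# Rough per-frame cost comparison for VC-1 vs AVC sources.
# Assumptions (not measurements): midpoints of the observed fps ranges,
# identical encode cost, and decode + encode time adding up per frame.

def midpoint(low, high):
    """Midpoint of an observed fps range."""
    return (low + high) / 2


def ms_per_frame(fps):
    """Milliseconds spent on each frame at a given transcode speed."""
    return 1000.0 / fps


vc1_fps = midpoint(150, 200)   # 175 fps
avc_fps = midpoint(200, 250)   # 225 fps

vc1_ms = ms_per_frame(vc1_fps)
avc_ms = ms_per_frame(avc_fps)

# Under the assumptions above, the whole difference is extra decode time.
extra_decode_ms = vc1_ms - avc_ms

print(f"VC-1 source: {vc1_ms:.2f} ms per frame")
print(f"AVC source:  {avc_ms:.2f} ms per frame")
print(f"Extra time per frame with VC-1: {extra_decode_ms:.2f} ms")
```

So under those assumptions, the VC-1 source costs a bit over a millisecond more per frame, which is small in absolute terms but enough to show up at these speeds.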

If you have evidence to the contrary, I am happy to look at it, but decoding x265 is not a very CPU-intensive job. I just wish I could make it so you only see the decode on the CPU and the encode on the GPU; that would settle it once and for all. But the only way I’ve found to get the CPU to only decode is playback with hardware decoding off.
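For anyone who wants to try isolating the decode side outside of the tool in the screenshots, one possible approach is to have ffmpeg decode the video and throw the frames away using its `null` output, with no `-hwaccel` option so the decode stays in software. This is only a sketch and I haven’t run it on these files: it assumes `ffmpeg` is installed and on the PATH, the helper names are made up for illustration, and the fps is estimated from wall-clock time and the last `frame=` count ffmpeg prints.

```python
import re
import subprocess
import time


def decode_only_cmd(path):
    """Build an ffmpeg command that decodes the first video stream and
    discards the result (null muxer), so no real encode takes place."""
    return ["ffmpeg", "-hide_banner", "-i", path,
            "-map", "0:v:0", "-f", "null", "-"]


def last_frame_count(stderr_text):
    """Return the last 'frame=' value from ffmpeg's progress output,
    or None if no progress line was found."""
    matches = re.findall(r"frame=\s*(\d+)", stderr_text)
    return int(matches[-1]) if matches else None


def measure_decode_fps(path):
    """Estimate software decode speed as frames / wall-clock seconds."""
    start = time.perf_counter()
    proc = subprocess.run(decode_only_cmd(path),
                          stdout=subprocess.DEVNULL,
                          stderr=subprocess.PIPE,
                          text=True, errors="replace", check=True)
    elapsed = time.perf_counter() - start
    frames = last_frame_count(proc.stderr)
    return frames / elapsed if frames else None


# Hypothetical usage (the file name is a placeholder):
# print(measure_decode_fps("sample.mkv"))
```

Running that on an x264 file and an x265 file of the same content would give a decode-only number to compare against the full transcode FPS.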

I was more focused on OP’s original question about transcoding the content from 4K to 720p… for that, the encode part would be the most intensive, imo.
