Isn’t Proxmox Debian-based, though? So would I run apt install nvidia-driver instead? apt install nvidia-driver-550 on the host fails because the package can’t be found.
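Proxmox VE is built on Debian, where the packaged driver is called `nvidia-driver` (in the non-free component); versioned names such as `nvidia-driver-550` follow Ubuntu's naming, which would explain the "can't be found" error. A minimal sketch for checking what apt can actually install. The helper name `has_candidate` is made up for illustration, and it only parses `apt-cache policy` output:

```shell
# Hypothetical helper: reads `apt-cache policy <pkg>` output on stdin and
# succeeds only if apt reports an installable candidate version.
has_candidate() {
  awk '/^[[:space:]]*Candidate:/ { found = ($2 != "(none)") } END { exit !found }'
}

# Check the two package names from the question, if apt-cache is present.
for pkg in nvidia-driver nvidia-driver-550; do
  if command -v apt-cache >/dev/null 2>&1; then
    if apt-cache policy "$pkg" | has_candidate; then
      echo "$pkg: available"
    else
      echo "$pkg: not available from the configured repositories"
    fi
  fi
done
```

If neither name has a candidate, the non-free component is probably not enabled in the apt sources.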

OK, following this guide, Nvidia GPU passthrough in LXC :: TheOrangeOne, I was able to install the official NVIDIA drivers, and after rebooting, nvidia-smi works. I don’t have the lxc command for your script to run, though. Update: Looks like the new command is pct.

Update 2: Continuing to follow that guide, I now have nvidia-smi working in the LXC. I had to remove nomodeset from the GRUB kernel command line, blacklist the nouveau driver in Proxmox, and run nvidia-persistenced to create the /dev/nvidia-modeset character device. Next step is to connect my TrueNAS NFS share and try Plex.
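Those three host-side changes could be scripted roughly as follows. This is a sketch, not the guide's exact procedure: the function names are invented here, and `/etc/modprobe.d/blacklist-nouveau.conf` is only the conventional location for such a file.

```shell
# Remove a standalone "nomodeset" token from a kernel command line string.
strip_nomodeset() {
  printf '%s\n' "$1" |
    sed -E 's/(^|[[:space:]])nomodeset([[:space:]]|$)/\1\2/g; s/[[:space:]]+/ /g; s/^ //; s/ $//'
}

# Write a modprobe snippet that keeps the nouveau driver from loading.
# $1 is the target file, e.g. /etc/modprobe.d/blacklist-nouveau.conf
write_nouveau_blacklist() {
  printf 'blacklist nouveau\noptions nouveau modeset=0\n' > "$1"
}

strip_nomodeset "quiet nomodeset"   # prints: quiet

# After editing GRUB_CMDLINE_LINUX_DEFAULT in /etc/default/grub and writing the
# blacklist file, the host still needs (as root):
#   update-grub && update-initramfs -u && reboot
#   nvidia-persistenced    # creates /dev/nvidia-modeset, per the post above
```

The follow-up commands are left as comments because they change boot configuration and should be run deliberately.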

Chuck, I was able to get Plex on the LXC but it doesn’t show the GPU in the list, yet nvidia-smi shows results. What steps should I try next?

EDIT: I finally got it!

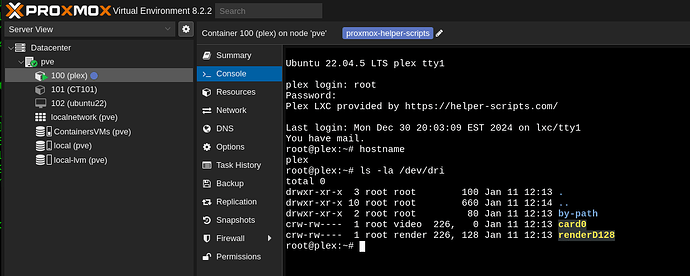

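ctid=100  # change to the Plex container ID
# Look up the GID of the container's "render" group
renderid=$(pct exec "$ctid" -- getent group render | cut -d: -f3)
# Pass the host render node into the container, owned by that group
pct set "$ctid" -dev0 "/dev/dri/renderD128,gid=$renderid"
pct reboot "$ctid"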

That got the gpu to show up in the list.
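One thing the commands above do not guard against is an empty lookup: if the container has no render group, `gid=` is passed blank. A defensive variant might validate the value first. `gid_from_group_line` is a hypothetical helper, not part of pct.

```shell
# Hypothetical helper: extract the numeric GID from a getent-style line
# ("name:x:GID:members"); fails if the field is missing or not a number.
gid_from_group_line() {
  local gid
  gid=$(printf '%s\n' "$1" | cut -d: -f3)
  case "$gid" in
    ''|*[!0-9]*) return 1 ;;
  esac
  printf '%s\n' "$gid"
}

# Intended use on the Proxmox host (commented so nothing changes by accident):
#   ctid=100
#   line=$(pct exec "$ctid" -- getent group render) &&
#     gid=$(gid_from_group_line "$line") &&
#     pct set "$ctid" -dev0 "/dev/dri/renderD128,gid=$gid" &&
#     pct reboot "$ctid"
```

Chaining with `&&` means the container is only reconfigured and rebooted when a valid GID was found.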

Then I had to add the plex user to the lxc_shares group (to show my media). All from this guide: [TUTORIAL] - Tutorial: Unprivileged LXCs - Mount CIFS shares | Proxmox Support Forum
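Group membership matters in an unprivileged container because IDs are shifted: by default Proxmox maps container UIDs and GIDs onto host IDs starting at 100000, so files on a mounted share appear inside the container under the shifted owner. A small sketch of that arithmetic, assuming the default offset. The GID 10000 is only an example value, and the function name is made up.

```shell
# Host-side ID that a container-side UID/GID maps to, assuming the default
# unprivileged offset of 100000 (pass a second argument to override it).
host_id_for() {
  echo $(( ${2:-100000} + $1 ))
}

host_id_for 10000   # prints: 110000

# Inside the container, adding the Plex service user to the group
# (assumes the lxc_shares group already exists there):
#   usermod -aG lxc_shares plex
```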

And it worked. The odd thing is that Plex shows (hw) in the dashboard and my CPU is doing almost nothing, but nvidia-smi doesn’t list any processes:

+-----------------------------------------------------------------------------------------+
| NVIDIA-SMI 550.142                Driver Version: 550.142        CUDA Version: 12.4     |
|-----------------------------------------+------------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id          Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |           Memory-Usage | GPU-Util  Compute M. |
|                                         |                        |               MIG M. |
|=========================================+========================+======================|
|   0  Quadro P400                    Off |   00000000:81:00.0 Off |                  N/A |
| 34%   46C    P0             N/A /  N/A  |     140MiB /   2048MiB |      1%      Default |
|                                         |                        |                  N/A |
+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+
| Processes:                                                                              |
|  GPU   GI   CI        PID   Type   Process name                              GPU Memory |
|        ID   ID                                                               Usage      |
|=========================================================================================|
+-----------------------------------------------------------------------------------------+

ChuckPa

January 11, 2025, 5:22pm

24

I used the ‘tteck’ helper scripts to create mine.

When you look at the config, this is what you see:

root@pve:~# pct config 100
arch: amd64
cores: 4
features: nesting=1
hostname: plex
memory: 4096
mp0: /glock,mp=/glock
net0: name=eth0,bridge=vmbr0,hwaddr=BC:24:11:64:46:69,ip=dhcp,type=veth
onboot: 1
ostype: ubuntu
rootfs: ContainersVMs:vm-100-disk-0,size=256G
swap: 512
tags: proxmox-helper-scripts
lxc.cgroup2.devices.allow: a
lxc.cap.drop:
lxc.cgroup2.devices.allow: c 188:* rwm
lxc.cgroup2.devices.allow: c 189:* rwm
lxc.mount.entry: /dev/serial/by-id dev/serial/by-id none bind,optional,create=dir
lxc.mount.entry: /dev/ttyUSB0 dev/ttyUSB0 none bind,optional,create=file
lxc.mount.entry: /dev/ttyUSB1 dev/ttyUSB1 none bind,optional,create=file
lxc.mount.entry: /dev/ttyACM0 dev/ttyACM0 none bind,optional,create=file
lxc.mount.entry: /dev/ttyACM1 dev/ttyACM1 none bind,optional,create=file
lxc.cgroup2.devices.allow: c 226:0 rwm
lxc.cgroup2.devices.allow: c 226:128 rwm
lxc.cgroup2.devices.allow: c 29:0 rwm
lxc.mount.entry: /dev/fb0 dev/fb0 none bind,optional,create=file
lxc.mount.entry: /dev/dri dev/dri none bind,optional,create=dir
lxc.mount.entry: /dev/dri/renderD128 dev/dri/renderD128 none bind,optional,create=file
root@pve:~#

You’ll need to add the nvidia entries as well. (I’ve seen them posted here in the forum but I’m not sure where.)

I use these three. You might need a fourth (IIRC).

nvidia.driver.capabilities: all
nvidia.require.cuda: "true"
nvidia.runtime: "true"

I’ll have to try this. When I’m hardware transcoding and run nvidia-smi on the host, this is what I see:

root@pve:/etc/pve/lxc# nvidia-smi
Sat Jan 11 13:14:01 2025
+-----------------------------------------------------------------------------------------+
| NVIDIA-SMI 550.142                Driver Version: 550.142        CUDA Version: 12.4     |
|-----------------------------------------+------------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id          Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |           Memory-Usage | GPU-Util  Compute M. |
|                                         |                        |               MIG M. |
|=========================================+========================+======================|
|   0  Quadro P400                     On |   00000000:81:00.0 Off |                  N/A |
| 34%   39C    P0             N/A /  N/A  |     140MiB /   2048MiB |     33%      Default |
|                                         |                        |                  N/A |
+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+
| Processes:                                                                              |
|  GPU   GI   CI        PID   Type   Process name                              GPU Memory |
|        ID   ID                                                               Usage      |
|=========================================================================================|
|    0   N/A  N/A    346273      C   ...lib/plexmediaserver/Plex Transcoder        135MiB |
+-----------------------------------------------------------------------------------------+

Which definitely looks correct.
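To check this from a script rather than by eye, the compute-process list can be queried in CSV form. A rough sketch, meant for the host. `has_plex_transcoder` is a made-up helper that simply looks for the process name seen in the table above.

```shell
# Made-up helper: succeeds if any line on stdin mentions the Plex transcoder.
has_plex_transcoder() {
  grep -q 'Plex Transcoder'
}

# Only query the GPU if nvidia-smi is actually installed on this machine.
if command -v nvidia-smi >/dev/null 2>&1; then
  if nvidia-smi --query-compute-apps=pid,process_name,used_memory \
       --format=csv,noheader | has_plex_transcoder; then
    echo "Plex Transcoder is running on the GPU"
  else
    echo "No Plex Transcoder process reported"
  fi
fi
```

Run it while a transcoded stream is playing, since the process only exists during playback.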

ChuckPa

January 11, 2025, 6:32pm

26

That is absolutely correct.

So what’s not working?

Can you capture me a set of DEBUG logs (ZIP) right after it starts transcoded playback?

I tried your LXC config above and hardware transcoding did not work; it fell back to software.

# Allow cgroup access
# Pass through device files
arch: amd64
cores: 4
dev0: /dev/dri/renderD128,gid=993
features: nesting=1
hostname: plex
memory: 8192
mp0: /media/truenas/videos/,mp=/media,ro=1
mp1: /media/truenas/photos/,mp=/photos,ro=1
net0: name=eth0,bridge=vmbr0,firewall=1,hwaddr=BC:24:11:C7:9D:12,ip=dhcp,ip6=dhcp,type=veth
ostype: ubuntu
rootfs: local-lvm:vm-102-disk-0,size=80G
swap: 1024
unprivileged: 1
lxc.cgroup2.devices.allow: c 195:* rwm
lxc.cgroup2.devices.allow: c 509:* rwm
lxc.mount.entry: /dev/dri/card0 dev/dri/card0 none bind,optional,create=file
lxc.mount.entry: /dev/nvidia0 dev/nvidia0 none bind,optional,create=file
lxc.mount.entry: /dev/nvidiactl dev/nvidiactl none bind,optional,create=file
lxc.mount.entry: /dev/nvidia-uvm dev/nvidia-uvm none bind,optional,create=file
lxc.mount.entry: /dev/nvidia-modeset dev/nvidia-modeset none bind,optional,create=file
lxc.mount.entry: /dev/nvidia-uvm-tools dev/nvidia-uvm-tools none bind,optional,create=file
lxc.mount.entry: /dev/nvram dev/nvram none bind,optional,create=file
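The major numbers in the `lxc.cgroup2.devices.allow` lines (195 and 509 here) have to match the device nodes on the host, and the one for nvidia-uvm is assigned dynamically, so it can differ between machines. A sketch for generating the lines from the actual nodes. The function names are illustrative, and `stat -c` assumes GNU coreutils.

```shell
# Print the major device number of a device node, in decimal.
# `stat -c %t` reports it in hex, so convert it.
dev_major() {
  echo $(( 0x$(stat -c '%t' "$1") ))
}

# Emit a cgroup2 allow line covering every minor of that device's major.
cgroup_allow_line() {
  echo "lxc.cgroup2.devices.allow: c $(dev_major "$1"):* rwm"
}

# Example: list the lines needed for whatever NVIDIA nodes exist on this host.
for node in /dev/nvidia0 /dev/nvidiactl /dev/nvidia-uvm /dev/nvidia-modeset; do
  [ -c "$node" ] && cgroup_allow_line "$node"
done | sort -u
```

Comparing the output against the config is a quick way to catch a stale major number after a driver or kernel update.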

Running nvidia-smi on the host while it’s transcoding works. Running it in the LXC produces output but does not show any processes.

vm 102 - unable to parse config: nvidia.driver.capabilities: all
vm 102 - unable to parse config: nvidia.require.cuda: "true"
vm 102 - unable to parse config: nvidia.runtime: "true"
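These errors suggest the three `nvidia.*` keys are LXD instance options rather than anything the Proxmox config parser knows. A quick way to spot such lines before starting the container, sketched with a hypothetical `find_lxd_keys` helper:

```shell
# Hypothetical helper: print (with line numbers) any LXD-style nvidia.* keys
# found in a Proxmox container config file; succeeds if at least one is found.
find_lxd_keys() {
  grep -nE '^nvidia\.[a-z.]+:' "$1"
}

# Example on the host, using the container ID from the error messages.
conf=/etc/pve/lxc/102.conf
if [ -r "$conf" ] && find_lxd_keys "$conf"; then
  echo "Remove the lines above; pct cannot parse them."
fi
```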

ChuckPa

January 11, 2025, 7:16pm

28

I use LXD / LXC.

The config looks like this:

[chuck@lizum ~.2000]$ lxc config show nvidia
architecture: x86_64
config:
  image.architecture: amd64
  image.description: ubuntu 22.04 LTS amd64 (release) (20240821)
  image.label: release
  image.os: ubuntu
  image.release: jammy
  image.serial: "20240821"
  image.type: squashfs
  image.version: "22.04"
  nvidia.driver.capabilities: all
  nvidia.require.cuda: "true"
  nvidia.runtime: "true"
  volatile.base_image: a3a8118143289e285ec44b489fb1a0811da75c27a22004f7cd34db70a60a0af4
  volatile.cloud-init.instance-id: cbebf9f6-289d-4fde-af56-0bba1dfecd12
  volatile.eth0.hwaddr: 00:16:3e:15:d5:5a
  volatile.idmap.base: "0"
  volatile.idmap.current: '[{"Isuid":true,"Isgid":false,"Hostid":1000000,"Nsid":0,"Maprange":1000000000},{"Isuid":false,"Isgid":true,"Hostid":1000000,"Nsid":0,"Maprange":1000000000}]'
  volatile.idmap.next: '[{"Isuid":true,"Isgid":false,"Hostid":1000000,"Nsid":0,"Maprange":1000000000},{"Isuid":false,"Isgid":true,"Hostid":1000000,"Nsid":0,"Maprange":1000000000}]'
  volatile.last_state.idmap: '[]'
  volatile.last_state.power: STOPPED
  volatile.uuid: 11e2f94c-1046-473c-b219-ae051f8aa797
  volatile.uuid.generation: 11e2f94c-1046-473c-b219-ae051f8aa797
devices:
  gpu:
    gid: "110"
    type: gpu
  media:
    path: /glock/media
    readonly: "true"
    source: /glock/media
    type: disk
ephemeral: false
profiles:
- default
stateful: false
description: ""
[chuck@lizum ~.2001]$
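For reference, on an LXD host the nvidia keys and devices in a config like this are normally set through the `lxc` client; none of it applies to Proxmox's pct. A dry-run sketch that only prints the commands unless `DRY_RUN=0`. The instance name `nvidia`, the GID, and the paths are taken from the output above, while the `run` wrapper and `configure_instance` are invented for this example.

```shell
DRY_RUN=${DRY_RUN:-1}

# Invented wrapper: print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Apply the three NVIDIA options and the two devices to an LXD instance.
configure_instance() {
  run lxc config set "$1" nvidia.runtime true
  run lxc config set "$1" nvidia.driver.capabilities all
  run lxc config set "$1" nvidia.require.cuda true
  run lxc config device add "$1" gpu gpu gid=110
  run lxc config device add "$1" media disk source=/glock/media path=/glock/media readonly=true
}

configure_instance nvidia
```

Keeping dry-run as the default makes it safe to paste on a machine that has no `lxc` client at all.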

Are you using TrueNAS Core (BSD) or TrueNAS Scale (Linux)?

What is your host OS? I don’t have the lxc command.

I am using TrueNAS Core (BSD).

ChuckPa

January 11, 2025, 7:46pm

30

I’m sorry, everything I’ve been sharing is for naught.

So you have jails?

Yes, I’m moving Plex from a TrueNAS jail to a Proxmox VM on a different server. I drastically expanded my homelab over the past few weeks, adding to my Proxmox setup, and didn’t want to interfere with my TrueNAS.

ChuckPa

January 12, 2025, 6:56pm

32

Setting up a new machine from scratch is the best bet. There’s little/nothing in common between the two except, perhaps, source media locations.

As for Proxmox and HW transcoding, while I don’t have a GPU card, I do have iGPU support via those helper scripts, which perform the passthrough commands.

Very sorry, but the best I can do is “Mr Google”…

https://www.reddit.com/r/Proxmox/comments/1cneob0/plex_lxc_gpu_passthrough/
