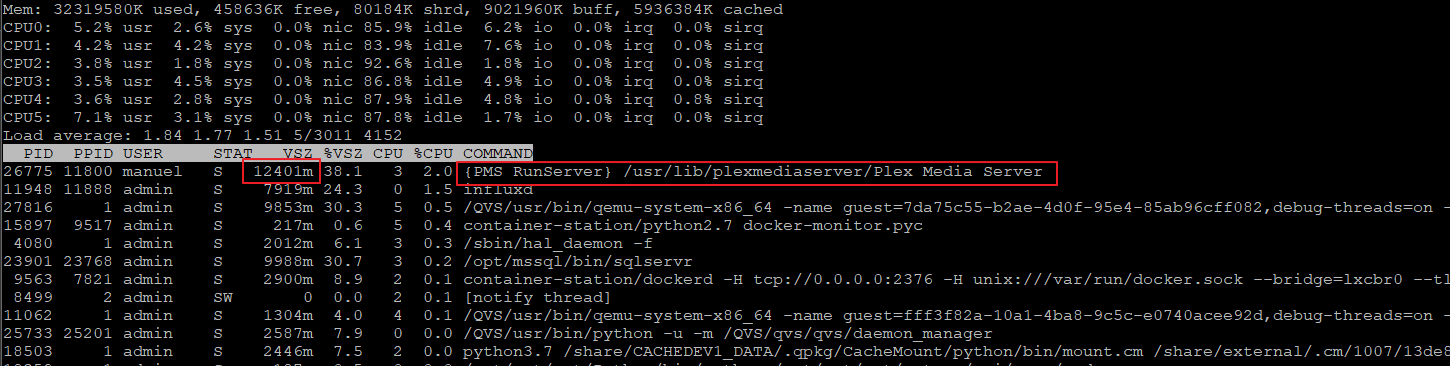

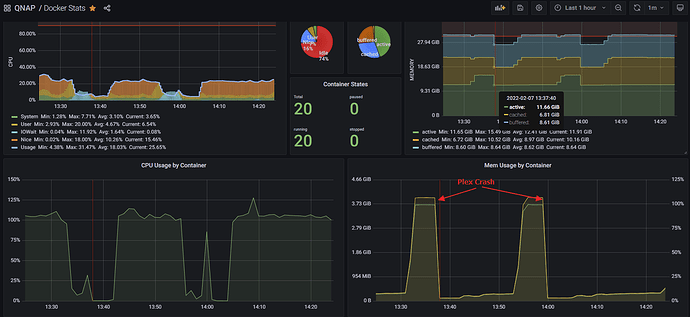

Last night I also got this QuLog message while the Plex maintenance jobs were running.

And remember: there are 32 GB installed, with 20 GB available.

Here are the last lines from the PMS log while maintenance was running:

Dec 09, 2021 21:58:32.207 [0x7f2943f74b38] ERROR - SSDP: Error parsing device schema for http://192.168.178.35:9080

Dec 10, 2021 01:02:23.983 [0x7f29451e8b38] ERROR - BaseIndexFrameFileManager: expected 4 images, but found 1

Dec 10, 2021 01:02:24.116 [0x7f29451e8b38] ERROR - BaseIndexFrameFileManager: expected 10 images, but found 0

Dec 10, 2021 01:03:42.928 [0x7f29451e8b38] ERROR - BaseIndexFrameFileManager: expected 1577 images, but found 9

Dec 10, 2021 01:05:06.714 [0x7f29451e8b38] ERROR - BaseIndexFrameFileManager: expected 1687 images, but found 181

Dec 10, 2021 01:06:32.044 [0x7f29451e8b38] ERROR - BaseIndexFrameFileManager: expected 1743 images, but found 0

Dec 10, 2021 01:07:56.950 [0x7f29451e8b38] ERROR - BaseIndexFrameFileManager: expected 1732 images, but found 0

Dec 10, 2021 01:09:15.771 [0x7f29451e8b38] ERROR - BaseIndexFrameFileManager: expected 1597 images, but found 9

Dec 10, 2021 01:10:38.362 [0x7f29451e8b38] ERROR - BaseIndexFrameFileManager: expected 1627 images, but found 8

Dec 10, 2021 01:11:59.133 [0x7f29451e8b38] ERROR - BaseIndexFrameFileManager: expected 1667 images, but found 0

Dec 10, 2021 01:13:19.608 [0x7f29451e8b38] ERROR - BaseIndexFrameFileManager: expected 1716 images, but found 0

Dec 10, 2021 01:14:38.406 [0x7f29451e8b38] ERROR - BaseIndexFrameFileManager: expected 1657 images, but found 9

Dec 10, 2021 01:16:03.644 [0x7f29451e8b38] ERROR - BaseIndexFrameFileManager: expected 1868 images, but found 0

Dec 10, 2021 01:16:46.373 [0x7f29451e8b38] ERROR - BaseIndexFrameFileManager: expected 12021 images, but found 1830

Dec 10, 2021 01:16:46.687 [0x7f29451e8b38] WARN - MDE: unable to find a working transcode profile for video stream

Dec 10, 2021 01:16:46.687 [0x7f29451e8b38] WARN - MDE: unable to find a working transcode profile for video stream

Dec 10, 2021 01:16:54.716 [0x7f29451e8b38] WARN - MDE: unable to find a working transcode profile for video stream

Dec 10, 2021 01:16:54.716 [0x7f29451e8b38] WARN - MDE: unable to find a working transcode profile for video stream

Dec 10, 2021 01:16:54.717 [0x7f29451e8b38] INFO - CodecManager: obtaining decoder 'aac_lc'

Dec 10, 2021 01:17:02.823 [0x7f29451e8b38] WARN - MDE: unable to find a working transcode profile for video stream

Dec 10, 2021 01:17:02.823 [0x7f29451e8b38] WARN - MDE: unable to find a working transcode profile for video stream

Dec 10, 2021 01:17:10.411 [0x7f29451e8b38] WARN - MDE: unable to find a working transcode profile for video stream

Dec 10, 2021 01:17:10.411 [0x7f29451e8b38] WARN - MDE: unable to find a working transcode profile for video stream

Dec 10, 2021 01:17:18.206 [0x7f29451e8b38] WARN - MDE: unable to find a working transcode profile for video stream

Dec 10, 2021 01:17:18.207 [0x7f29451e8b38] WARN - MDE: unable to find a working transcode profile for video stream

Dec 10, 2021 01:17:25.956 [0x7f29451e8b38] WARN - MDE: unable to find a working transcode profile for video stream

Dec 10, 2021 01:17:25.956 [0x7f29451e8b38] WARN - MDE: unable to find a working transcode profile for video stream

Dec 10, 2021 01:17:33.701 [0x7f29451e8b38] WARN - MDE: unable to find a working transcode profile for video stream

Dec 10, 2021 01:17:33.701 [0x7f29451e8b38] WARN - MDE: unable to find a working transcode profile for video stream

Dec 10, 2021 01:17:41.604 [0x7f29451e8b38] WARN - MDE: unable to find a working transcode profile for video stream

Dec 10, 2021 01:17:41.604 [0x7f29451e8b38] WARN - MDE: unable to find a working transcode profile for video stream

Dec 10, 2021 01:17:54.739 [0x7f29451e8b38] WARN - MDE: unable to find a working transcode profile for video stream

Dec 10, 2021 01:17:54.739 [0x7f29451e8b38] WARN - MDE: unable to find a working transcode profile for video stream

Dec 10, 2021 01:42:16.770 [0x7f2943932b38] WARN - SLOW QUERY: It took 300.000000 ms to retrieve 8 items.

Dec 10, 2021 01:42:21.968 [0x7f2943afab38] WARN - Took too long (0.210000 seconds) to start a transaction on ../Statistics/StatisticsManager.cpp:249

Dec 10, 2021 01:42:22.161 [0x7f2943afab38] WARN - Transaction that was running was started on thread 0x7f29439e2b38 at ../Statistics/StatisticsManager.cpp:252

Dec 10, 2021 01:42:58.073 [0x7f2943b30b38] WARN - Took too long (0.110000 seconds) to start a transaction on ../Statistics/StatisticsResources.cpp:18

Dec 10, 2021 01:42:58.191 [0x7f2943b30b38] WARN - Transaction that was running was started on thread 0x7f294399cb38 at ../Statistics/StatisticsResources.cpp:18

Dec 10, 2021 01:43:44.455 [0x7f2943afab38] WARN - Held transaction for too long (../Statistics/StatisticsManager.cpp:249): 1.630000 seconds

Dec 10, 2021 01:43:55.104 [0x7f294516db38] WARN - Failed to set up two way stream, caught exception: write: protocol is shutdown

Dec 10, 2021 01:44:39.261 [0x7f2943afab38] WARN - Held transaction for too long (../Statistics/StatisticsManager.cpp:252): 1.060000 seconds

Dec 10, 2021 01:49:36.705 [0x7f2944b0bb38] ERROR - [EventSourceClient/pubsub] Retrying in 15 seconds.

Dec 10, 2021 01:54:48.674 [0x7f2944b0bb38] ERROR - [EventSourceClient/pubsub] Retrying in 30 seconds.

Dec 10, 2021 01:55:28.264 [0x7f29439e2b38] WARN - Took too long (0.120000 seconds) to start a transaction on ../Statistics/StatisticsManager.cpp:249

Dec 10, 2021 01:55:29.370 [0x7f29439e2b38] WARN - Transaction that was running was started on thread 0x7f29439e2b38 at ../Statistics/StatisticsManager.cpp:252

Dec 10, 2021 01:56:27.950 [0x7f2943b30b38] WARN - SLOW QUERY: It took 39870.000000 ms to retrieve 8 items.

Dec 10, 2021 01:56:32.218 [0x7f2943b30b38] INFO - It's been 21871 seconds, so we're starting scheduled library update for section 3 (Serien)
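To get a quick overview of what the maintenance window produced, a log like the excerpt above can be tallied with a short script. This is a minimal sketch, assuming every relevant line follows the `date [thread] LEVEL - message` shape visible in the excerpt; the function name `summarize` and the regular expressions are my own illustration, not anything provided by Plex. It counts lines per level, adds up the "expected N images, but found M" numbers from `BaseIndexFrameFileManager`, and collects the `SLOW QUERY` durations.

```python
import re
from collections import Counter

# Assumed line format, based on the PMS log excerpt:
# "Dec 10, 2021 01:02:23.983 [0x7f29451e8b38] ERROR - message text"
LINE_RE = re.compile(
    r"^(?P<ts>\w{3} \d{2}, \d{4} \d{2}:\d{2}:\d{2}\.\d{3}) "
    r"\[(?P<thread>0x[0-9a-f]+)\] "
    r"(?P<level>[A-Z]+) - (?P<msg>.*)$"
)
FRAMES_RE = re.compile(r"BaseIndexFrameFileManager: expected (\d+) images, but found (\d+)")
SLOW_RE = re.compile(r"SLOW QUERY: It took ([\d.]+) ms")


def summarize(lines):
    """Tally log levels, thumbnail (index frame) shortfalls and slow queries."""
    levels = Counter()
    expected = found = 0
    slow_ms = []
    for line in lines:
        m = LINE_RE.match(line.strip())
        if not m:
            continue  # skip blank lines and anything not in the assumed format
        levels[m["level"]] += 1
        msg = m["msg"]
        if (f := FRAMES_RE.search(msg)):
            expected += int(f[1])
            found += int(f[2])
        elif (s := SLOW_RE.search(msg)):
            slow_ms.append(float(s[1]))
    return {
        "levels": dict(levels),
        "frames_expected": expected,
        "frames_found": found,
        "frames_missing": expected - found,
        "slow_query_ms": slow_ms,
    }


if __name__ == "__main__":
    # A few lines copied from the excerpt; in practice you would pass the
    # lines of the PMS log file instead.
    sample = [
        "Dec 10, 2021 01:02:23.983 [0x7f29451e8b38] ERROR - BaseIndexFrameFileManager: expected 4 images, but found 1",
        "",
        "Dec 10, 2021 01:02:24.116 [0x7f29451e8b38] ERROR - BaseIndexFrameFileManager: expected 10 images, but found 0",
        "Dec 10, 2021 01:16:46.687 [0x7f29451e8b38] WARN - MDE: unable to find a working transcode profile for video stream",
        "Dec 10, 2021 01:56:27.950 [0x7f2943b30b38] WARN - SLOW QUERY: It took 39870.000000 ms to retrieve 8 items.",
    ]
    print(summarize(sample))
```

Run over the full excerpt, this would make it easy to see how many preview thumbnails were missing in total and how long the slowest queries took, for example the 39870 ms one right before the scheduled library update.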