Server Version#: 1.13.6.5339

Player Version#: ATV/etc.

I have a Debian 9 server running Plex Media Server, with everything installed on an SSD and very little else running on it. I'm seeing quite a few WARN "SLOW QUERY" messages when items are retrieved, and I notice slowness when refreshing and when moving between screens in general. I have a decent-sized library:

```
21744 files in library
0 files missing analyzation info
0 media_parts marked as deleted
0 metadata_items marked as deleted
0 directories marked as deleted
21696 files missing deep analyzation info.
```

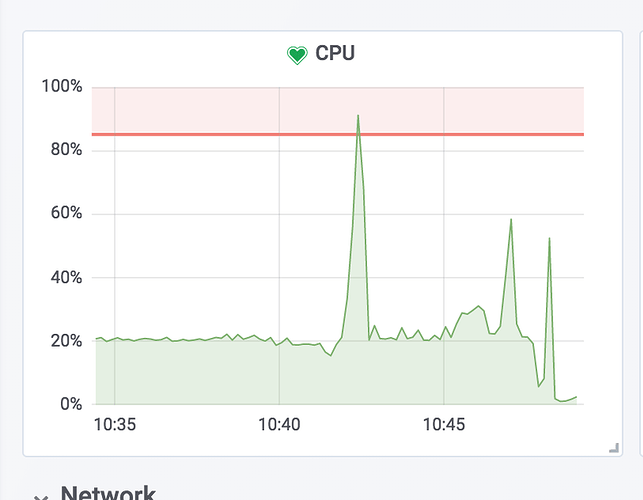

I monitor the box with netdata/Grafana, and it shows no CPU or I/O bottlenecks; the system is nearly idle while this is going on. For example, I'm currently running an Optimize job to convert some 4K content to 1080p, and I'm seeing entries like these:

```
Aug 29, 2018 10:01:43.025 [0x7fe7b0bfe700] WARN - SLOW QUERY: It took 460.000000 ms to retrieve 1 items.
Aug 29, 2018 10:01:43.037 [0x7fe7d47ff700] WARN - SLOW QUERY: It took 490.000000 ms to retrieve 50 items.
Aug 29, 2018 10:01:44.678 [0x7fe7d77fa700] WARN - SLOW QUERY: It took 230.000000 ms to retrieve 4 items.
Aug 29, 2018 10:01:44.683 [0x7fe7c67fe700] WARN - SLOW QUERY: It took 210.000000 ms to retrieve 1 items.
Aug 29, 2018 10:01:44.746 [0x7fe7d7ffb700] WARN - SLOW QUERY: It took 500.000000 ms to retrieve 50 items.
Aug 29, 2018 10:01:46.329 [0x7fe7d77fa700] WARN - SLOW QUERY: It took 220.000000 ms to retrieve 4 items.
Aug 29, 2018 10:01:46.344 [0x7fe7d47ff700] WARN - SLOW QUERY: It took 330.000000 ms to retrieve 4 items.
Aug 29, 2018 10:01:46.378 [0x7fe7d67f8700] WARN - SLOW QUERY: It took 430.000000 ms to retrieve 1 items.
Aug 29, 2018 10:01:46.385 [0x7fe7d97fe700] WARN - SLOW QUERY: It took 570.000000 ms to retrieve 50 items.
Aug 29, 2018 10:01:47.929 [0x7fe7dcfff700] WARN - SLOW QUERY: It took 1600.000000 ms to retrieve 1 items.
Aug 29, 2018 10:01:47.929 [0x7fe7c0bff700] WARN - SLOW QUERY: It took 1600.000000 ms to retrieve 1 items.
Aug 29, 2018 10:01:48.008 [0x7fe7d6ff9700] WARN - SLOW QUERY: It took 240.000000 ms to retrieve 4 items.
Aug 29, 2018 10:01:48.014 [0x7fe7d8ffd700] WARN - SLOW QUERY: It took 320.000000 ms to retrieve 4 items.
Aug 29, 2018 10:01:48.023 [0x7fe7d67f8700] WARN - SLOW QUERY: It took 280.000000 ms to retrieve 16 items.
Aug 29, 2018 10:01:48.040 [0x7fe7d5ff7700] WARN - SLOW QUERY: It took 400.000000 ms to retrieve 1 items.
Aug 29, 2018 10:01:48.064 [0x7fe7b0bfe700] WARN - SLOW QUERY: It took 570.000000 ms to retrieve 1 items.
Aug 29, 2018 10:01:48.077 [0x7fe7b8ffe700] WARN - SLOW QUERY: It took 570.000000 ms to retrieve 50 items.
Aug 29, 2018 10:01:49.752 [0x7fe7d47ff700] WARN - SLOW QUERY: It took 1650.000000 ms to retrieve 1 items.
Aug 29, 2018 10:01:49.877 [0x7fe7d5ff7700] WARN - SLOW QUERY: It took 330.000000 ms to retrieve 1 items.
Aug 29, 2018 10:01:49.879 [0x7fe7d97fe700] WARN - SLOW QUERY: It took 390.000000 ms to retrieve 4 items.
Aug 29, 2018 10:01:49.889 [0x7fe7c0bff700] WARN - SLOW QUERY: It took 390.000000 ms to retrieve 1 items.
Aug 29, 2018 10:01:49.903 [0x7fe7b8ffe700] WARN - SLOW QUERY: It took 520.000000 ms to retrieve 50 items.
Aug 29, 2018 10:01:51.792 [0x7fe7b8ffe700] WARN - SLOW QUERY: It took 290.000000 ms to retrieve 4 items.
Aug 29, 2018 10:01:51.794 [0x7fe7c67fe700] WARN - SLOW QUERY: It took 230.000000 ms to retrieve 1 items.
Aug 29, 2018 10:01:51.798 [0x7fe7d67f8700] WARN - SLOW QUERY: It took 260.000000 ms to retrieve 4 items.
Aug 29, 2018 10:01:51.805 [0x7fe7b53ff700] WARN - SLOW QUERY: It took 370.000000 ms to retrieve 4 items.
Aug 29, 2018 10:01:51.839 [0x7fe7b03fd700] WARN - SLOW QUERY: It took 400.000000 ms to retrieve 50 items.
Aug 29, 2018 10:01:53.655 [0x7fe7b97ff700] WARN - SLOW QUERY: It took 370.000000 ms to retrieve 50 items.
Aug 29, 2018 10:01:55.264 [0x7fe7bfbfd700] WARN - SLOW QUERY: It took 1630.000000 ms to retrieve 2 items.
Aug 29, 2018 10:01:55.350 [0x7fe7bfbfd700] WARN - SLOW QUERY: It took 260.000000 ms to retrieve 4 items.
Aug 29, 2018 10:01:55.362 [0x7fe7c67fe700] WARN - SLOW QUERY: It took 240.000000 ms to retrieve 4 items.
Aug 29, 2018 10:01:55.389 [0x7fe7d97fe700] WARN - SLOW QUERY: It took 550.000000 ms to retrieve 50 items.
```
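To see whether the delays track the number of items retrieved, the SLOW QUERY lines can be bucketed by item count. The sketch below is a hypothetical helper, not a Plex tool; its regex assumes the exact line format quoted above, and other server versions may word these warnings differently.

```python
import re
from statistics import mean, median

# Assumed pattern for the "SLOW QUERY" warnings quoted above; other PMS
# versions may format these lines differently.
SLOW_QUERY = re.compile(
    r"(?P<ts>\w{3} \d{1,2}, \d{4} [\d:.]+) \[(?P<thread>0x[0-9a-f]+)\] WARN - "
    r"SLOW QUERY: It took (?P<ms>[\d.]+) ms to retrieve (?P<items>\d+) items\."
)


def parse_slow_queries(lines):
    """Yield (timestamp, thread, milliseconds, item_count) for each matching line."""
    for line in lines:
        m = SLOW_QUERY.search(line)
        if m:
            yield m["ts"], m["thread"], float(m["ms"]), int(m["items"])


def summarize(lines):
    """Group slow-query durations (ms) by the number of items retrieved."""
    by_items = {}
    for _ts, _thread, ms, items in parse_slow_queries(lines):
        by_items.setdefault(items, []).append(ms)
    return {
        items: {
            "count": len(durations),
            "mean_ms": mean(durations),
            "median_ms": median(durations),
            "max_ms": max(durations),
        }
        for items, durations in sorted(by_items.items())
    }


def print_summary(path):
    """Print one line per item-count bucket for the log file at `path`."""
    with open(path, encoding="utf-8", errors="replace") as f:
        for items, s in summarize(f).items():
            print(f"{items:>3} items: n={s['count']:<4} mean={s['mean_ms']:.0f} ms "
                  f"median={s['median_ms']:.0f} ms max={s['max_ms']:.0f} ms")
```

Usage would be something like `print_summary("Plex Media Server.log")` (the file name is an assumption; point it at the uncompressed log). In the excerpt above, single-item retrievals range from 210 ms to 1650 ms while 50-item retrievals stay between 370 ms and 570 ms, so item count alone does not appear to explain the slow ones.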

plex.log.gz (32.2 KB)

I've attached the full log file. Before capturing it, I ran a full database rebuild using the method described here, to make sure nothing odd was going on:

https://support.plex.tv/articles/201100678-repair-a-corrupt-database/#toc-2
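As a quick sanity check alongside that procedure, SQLite's `PRAGMA integrity_check` can be run against a copy of the library database using Python's standard `sqlite3` module. This is a minimal sketch, not part of the linked article; it assumes Plex Media Server has been stopped first so the database is not being written, and it works on a temporary copy so the live file is left untouched.

```python
import shutil
import sqlite3
import tempfile
from pathlib import Path


def integrity_check_copy(db_path):
    """Run PRAGMA integrity_check on a temporary copy of an SQLite database.

    Assumes the server that owns the database (e.g. Plex Media Server)
    has been stopped before this is called.
    """
    src = Path(db_path)
    with tempfile.TemporaryDirectory() as tmp:
        copy = Path(tmp) / src.name
        shutil.copy2(src, copy)
        # Copy a write-ahead log sidecar too, if one was left behind.
        wal = src.with_name(src.name + "-wal")
        if wal.exists():
            shutil.copy2(wal, Path(tmp) / wal.name)
        conn = sqlite3.connect(str(copy))
        try:
            rows = conn.execute("PRAGMA integrity_check").fetchall()
        finally:
            conn.close()
    # A healthy database returns a single row containing "ok".
    return [row[0] for row in rows]
```

On a Debian package install the library database is typically at `/var/lib/plexmediaserver/Library/Application Support/Plex Media Server/Plug-in Support/Databases/com.plexapp.plugins.library.db`; treat that path as an assumption and adjust it for your setup. A result of `["ok"]` indicates SQLite found no structural corruption, which would point away from corruption as the cause of the slow queries.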