Please forgive my lack of Proxmox understanding; I just got it and I'm still learning.

- Set up PMS using the tteck script for Plex.

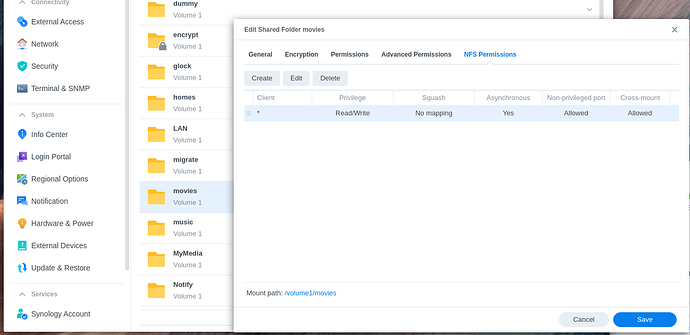

- I forget the exact step, but I enabled NFS (privileged?) and mounted the media inside the container.

- I think (not certain) the tteck script did the hardware passthrough for me.

- Hardware transcoding on the N100 (Alder Lake-N) is working.

For you:

- `ls -la /dev/dri`: confirm the devices are there, and note which groups own the hardware nodes (usually `video` and `render`).

- `groups plex`: see whether the `plex` user is a member of those groups.
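The two checks above can also be scripted. This is a minimal Python sketch, assuming it runs on Linux inside the Plex container and that the service account is named `plex`: it lists the character devices under `/dev/dri` with their owning group and flags any node whose group the user is not in. The helper names (`node_groups`, `missing_gids`, `report`) are hypothetical, not part of Plex or Proxmox.

```python
import grp
import os
import pwd
import stat
import sys
from pathlib import Path


def node_groups(dri_dir: str = "/dev/dri") -> dict[str, int]:
    """Map each character-device node in dri_dir (e.g. card0, renderD128) to its owning GID."""
    path = Path(dri_dir)
    if not path.is_dir():
        return {}
    nodes: dict[str, int] = {}
    for entry in sorted(path.iterdir()):
        st = entry.stat()
        if stat.S_ISCHR(st.st_mode):  # skips subdirectories such as by-path/
            nodes[entry.name] = st.st_gid
    return nodes


def group_name(gid: int) -> str:
    """Resolve a GID to a group name, falling back to the number if it is unmapped."""
    try:
        return grp.getgrgid(gid).gr_name
    except KeyError:
        return str(gid)


def missing_gids(nodes: dict[str, int], user_gids: set[int]) -> dict[str, int]:
    """Return only the nodes whose owning group the user is NOT a member of."""
    return {name: gid for name, gid in nodes.items() if gid not in user_gids}


def report(user: str = "plex", dri_dir: str = "/dev/dri") -> list[str]:
    """Build a human-readable status line per /dev/dri node for the given user."""
    nodes = node_groups(dri_dir)
    if not nodes:
        return [f"no device nodes in {dri_dir}: passthrough is not reaching this container"]
    try:
        pw = pwd.getpwnam(user)
    except KeyError:
        return [f"user {user!r} does not exist in this container"]
    # Primary group plus all supplementary groups listed for the user
    user_gids = set(os.getgrouplist(user, pw.pw_gid))
    missing = missing_gids(nodes, user_gids)
    lines = []
    for name, gid in nodes.items():
        status = "MISSING" if name in missing else "ok"
        lines.append(f"{name}: group {group_name(gid)} ({gid}) -> {status}")
    return lines


if __name__ == "__main__":
    for line in report(sys.argv[1] if len(sys.argv) > 1 else "plex"):
        print(line)
```

An empty `/dev/dri` points at the passthrough; a `MISSING` flag points at group membership. Note that a running process keeps the groups it started with, so Plex needs a restart after any group change.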

When Plex starts, it will look for the hardware.

Going by your logs, either the passthrough isn't being detected, or Plex doesn't have permission to the `/dev/dri` nodes through group membership:

```
Sep 04, 2024 18:32:29.383 [126155812252472] DEBUG - [Req#367/Transcode] Starting a transcode session 37thqdk7kjmyj6gwyjg8jtkc at offset -1.0 (state=3)
Sep 04, 2024 18:32:29.383 [126155812252472] DEBUG - [Req#367/Transcode] TPU: hardware transcoding: enabled, but no hardware decode accelerator found
Sep 04, 2024 18:32:29.383 [126155812252472] INFO - [Req#367/Transcode] CodecManager: starting EAE at "/tmp/pms-6965dbce-863e-4ee8-8569-747e7c9d6cfb/EasyAudioEncoder"
Sep 04, 2024 18:32:29.383 [126155812252472] DEBUG - [Req#367/Transcode/JobRunner] Job running: "/var/lib/plexmediaserver/Library/Application Support/Plex Media Server/Codecs/EasyAudioEncoder-8f4ca5ead7783c54a4930420-linux-x86_64/EasyAudioEncoder/EasyAudioEncoder"
Sep 04, 2024 18:32:29.383 [126155812252472] DEBUG - [Req#367/Transcode/JobRunner] In directory: "/tmp/pms-6965dbce-863e-4ee8-8569-747e7c9d6cfb/EasyAudioEncoder"
Sep 04, 2024 18:32:29.383 [126155812252472] DEBUG - [Req#367/Transcode/JobRunner] Jobs: Starting child process with pid 4461
Sep 04, 2024 18:32:29.384 [126155812252472] DEBUG - [Req#367/Transcode] [Universal] Using local file path instead of URL: /data/TV-Shows/HD-TV-Shows/Futurama (1999) [imdb-tt0149460]/Futurama (1999) - S09E01 - The One Amigo [DSNP WEBDL-1080p][EAC3 5.1][h264]-FLUX.mkv
Sep 04, 2024 18:32:29.384 [126155812252472] DEBUG - [Req#367/Transcode] TPU: hardware transcoding: final decoder: , final encoder:
Sep 04, 2024 18:32:29.384 [126155812252472] DEBUG - [Req#367/Transcode/JobRunner] Job running: EAE_ROOT=/tmp/pms-6965dbce-863e-4ee8-8569-747e7c9d6cfb/EasyAudioEncoder FFMPEG_EXTERNAL_LIBS='/var/lib/plexmediaserver/Library/Application\ Support/Plex\ Media\ Server/Codecs/7592546-570471557d92948f58893deb-linux-x86_64/' X_PLEX_TOKEN=xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx
```
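The two telling lines are the `TPU:` ones. To pull them out of a longer log, here is a small Python sketch. It assumes the wording matches the lines above and treats an empty `final decoder` / `final encoder` value as "no hardware codec selected". The default log path is an assumed location for a Linux package install, and `hw_status` / `TranscodeStatus` are hypothetical names.

```python
import re
import sys
from dataclasses import dataclass
from pathlib import Path
from typing import Iterable, Optional

# Patterns copied from the log lines above; other PMS versions may word them differently.
NO_ACCEL = "hardware transcoding: enabled, but no hardware decode accelerator found"
FINAL_RE = re.compile(r"TPU: hardware transcoding: final decoder: ([^,]*), final encoder:\s*(.*)$")

# Assumed default log location for a Linux package install; override with argv[1].
DEFAULT_LOG = ("/var/lib/plexmediaserver/Library/Application Support/"
               "Plex Media Server/Logs/Plex Media Server.log")


@dataclass
class TranscodeStatus:
    no_accelerator: bool = False    # saw "no hardware decode accelerator found"
    decoder: Optional[str] = None   # last "final decoder" value ("" = none selected)
    encoder: Optional[str] = None   # last "final encoder" value ("" = none selected)

    @property
    def using_hardware(self) -> bool:
        # Assumes a working setup names the accelerator in at least one slot
        return bool(self.decoder) or bool(self.encoder)


def hw_status(log_lines: Iterable[str]) -> TranscodeStatus:
    """Summarise the TPU (hardware transcoding) lines of a Plex Media Server log."""
    status = TranscodeStatus()
    for line in log_lines:
        if NO_ACCEL in line:
            status.no_accelerator = True
        match = FINAL_RE.search(line)
        if match:
            status.decoder = match.group(1).strip()
            status.encoder = match.group(2).strip()
    return status


if __name__ == "__main__":
    log = Path(sys.argv[1] if len(sys.argv) > 1 else DEFAULT_LOG)
    if log.is_file():
        print(hw_status(log.read_text(errors="replace").splitlines()))
    else:
        print(f"log not found: {log}")
```

On the log pasted here it reports `no_accelerator=True` with empty decoder and encoder values, which matches the diagnosis above.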