@ChuckPa I posted this on the Plex Discord and was directed to post it in this thread.

I upgraded Plex Media Server on my Synology NAS, and it crashes whenever I restore my backed-up databases. Specifically, the blobs database file appears to be the issue.

I rolled back the Plex update previously and that made the issue go away, but I decided to push forward with the update since I also wanted to update my NAS to the latest version of its OS.

Current Plex Media Server Version#: PlexMediaServer-1.41.0.8992-8463ad060-x86_64_DSM72

Based on my Plex Media Server logs, it appears to be a SQLite issue with a duplicate column. Screenshot of the log:

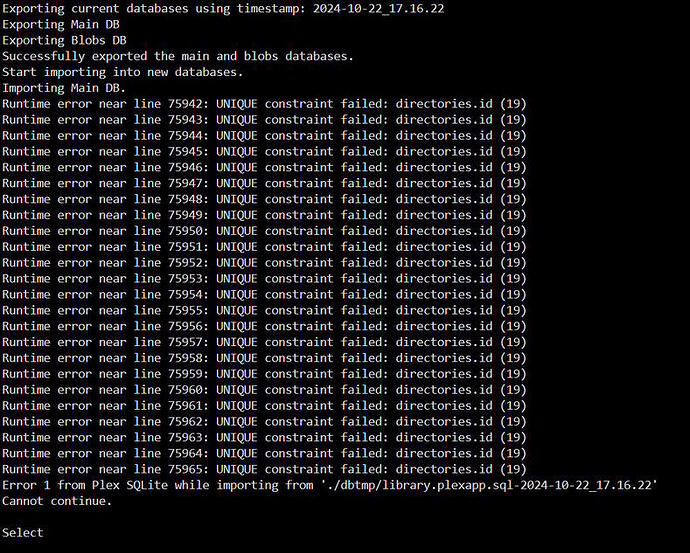

I tried to run the PlexDBRepair tool, but it reports that the blobs file is corrupt and fails to repair it. The repair tool's log doesn't say anything specific about what is failing in the blobs database file. Here's a screenshot of the utility's output over SSH when I ran it.

For now I restored only the main library.db file and left out the library.blobs.db file. The server runs, but it appears to be missing a ton of posters.

Anyway, any help with fixing this would be great! I really thought I’d get somewhere with the logs and repair tool, but I’m stumped at this point.

Recap of the main error messages in the Plex Media Server log file:

Exception inside transaction (inside=1) (/home/runner/actions-runner/_work/plex-media-server/plex-media-server/Library/DatabaseMigrations.cpp:342): sqlite3_statement_backend::prepare: duplicate column name: data for SQL: ALTER TABLE 'media_provider_resources' ADD 'data' blob

Exception thrown during migrations, aborting: sqlite3_statement_backend::prepare: duplicate column name: data for SQL: ALTER TABLE 'media_provider_resources' ADD 'data' blob

Database corruption: sqlite3_statement_backend::prepare: duplicate column name: data for SQL: ALTER TABLE 'media_provider_resources' ADD 'data' blob

Error: Unable to set up server: sqlite3_statement_backend::prepare: duplicate column name: data for SQL: ALTER TABLE 'media_provider_resources' ADD 'data' blob (N4soci10soci_errorE)
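
In case it helps anyone reason about the error, here is a minimal Python sketch of what I think is going on. It is my own illustration, not anything taken from Plex or PlexDBRepair. It uses an in-memory SQLite database as a stand-in for the blobs file, and the table layout (an `id` column plus `data`) is an assumption; only the table name and the `data` column come from the log above. The helper `has_column` is a hypothetical name.

```python
import sqlite3


def has_column(conn, table, column):
    """Return True if `table` already has a column named `column`."""
    # PRAGMA table_info returns one row per column; index 1 is the column name.
    rows = conn.execute(f'PRAGMA table_info("{table}")').fetchall()
    return any(row[1] == column for row in rows)


# In-memory stand-in for the blobs database (assumed schema, not Plex's real one).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE media_provider_resources (id INTEGER PRIMARY KEY, data BLOB)"
)

# Re-running the migration step against a table that already has the column
# fails the same way the server log shows.
try:
    conn.execute("ALTER TABLE media_provider_resources ADD COLUMN data BLOB")
    error_message = None
except sqlite3.OperationalError as exc:
    error_message = str(exc)

column_present = has_column(conn, "media_provider_resources", "data")
print(error_message)
print(column_present)
```

If that reading is right, the backed-up blobs file already contains the `data` column that the migration is trying to add. The same `has_column` check could be pointed at a copy of the real blobs file (never the live one) to confirm it, though I have not verified how the stock `sqlite3` module behaves with Plex's databases.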