I did the base research and asked.

- Cold start = 169 files (before signing in)

- Media Ready = 997 files (sign in / claim / all initialization complete)
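
If you want to reproduce counts like these on your own install, a minimal sketch along these lines should do. The function name `count_files` is mine, and the directory you point it at is up to you; nothing here is PMS-specific.

```python
import os


def count_files(root: str) -> int:
    """Count non-directory entries under root, recursively."""
    total = 0
    # os.walk yields (dirpath, dirnames, filenames) for every directory.
    for _dirpath, _dirnames, filenames in os.walk(root):
        total += len(filenames)
    return total
```

Run it once right after a cold start and again once the server is fully initialized, then compare the two numbers.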

This is the base structure. It can handle up to the size limit of the database file itself, which is 140 TB.

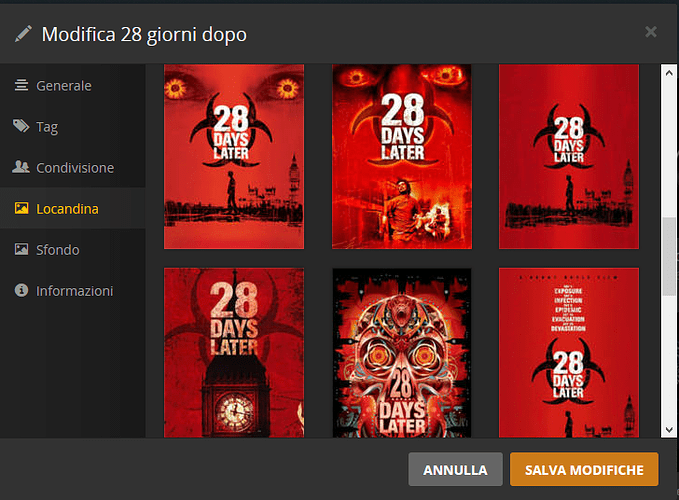

The design decision was whether to put all your posters and subtitles in the database along with all the indexing information, or leave them out in the file system. As it stands now, you can change, add, or remove a poster or subtitle without touching the DB. Anything else would require PMS to actually import the item.
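
To make the trade-off concrete, here is a rough sketch of the two designs using SQLite from Python. The table and column names are hypothetical and are not the actual PMS schema; the point is only that with a path reference a poster swap never touches the DB, while with a BLOB column every swap is a DB write.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """In-memory DB with two hypothetical tables, one per design."""
    conn = sqlite3.connect(":memory:")
    # Design A: the DB stores only a path; image bytes stay on the file system.
    conn.execute(
        "CREATE TABLE item_by_path (id INTEGER PRIMARY KEY, title TEXT, poster_path TEXT)"
    )
    # Design B: the DB stores the image bytes themselves as a BLOB.
    conn.execute(
        "CREATE TABLE item_by_blob (id INTEGER PRIMARY KEY, title TEXT, poster BLOB)"
    )
    return conn


def replace_poster_on_disk(path: str, data: bytes) -> None:
    """Design A: swapping a poster is a plain file write; no SQL runs."""
    with open(path, "wb") as f:
        f.write(data)


def replace_poster_in_db(conn: sqlite3.Connection, item_id: int, data: bytes) -> None:
    """Design B: swapping a poster means rewriting the row inside the DB."""
    conn.execute("UPDATE item_by_blob SET poster = ? WHERE id = ?", (data, item_id))
    conn.commit()
```

With design B, the DB file carries every image byte, so backups, optimization, and repair all scale with the size of the artwork rather than the size of the index.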

My DB is currently 85,915,648 bytes (85 MB). I have 16,149 posters consuming 5.5 GB of JPG storage. Add the posters to the DB and the DB file itself grows to roughly 5.5 GB.
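
A quick back-of-the-envelope check of those numbers, assuming "5.5 GB" means decimal gigabytes (that unit is my assumption):

```python
DB_BYTES = 85_915_648      # current DB size, from the post
POSTER_COUNT = 16_149      # number of posters, from the post
POSTER_BYTES = 5.5e9       # 5.5 GB of JPGs, assuming decimal gigabytes

# Average size of one poster, in kilobytes.
avg_poster_kb = POSTER_BYTES / POSTER_COUNT / 1e3

# How many times larger the DB file would be with the posters inside it.
growth_factor = (DB_BYTES + POSTER_BYTES) / DB_BYTES

print(f"average poster: {avg_poster_kb:.0f} KB")
print(f"DB would be about {growth_factor:.0f}x its current size")
```

That works out to an average of about 340 KB per poster and a DB file roughly 65 times its current size.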

Try optimizing that database. It won’t happen in real time.

Using my Samsung SSD (850 EVO), a tar backup of the Library takes 1:45. If this were an optimization, another 1:45 would be required to ‘import’ the data back into the DB file. PMS would have been inaccessible for 3 min 30 sec.
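
The downtime estimate is just the export time plus an import of the same duration. A small sketch, assuming the import really does take as long as the tar run (that is an estimate, not a measurement):

```python
from datetime import timedelta


def parse_mmss(text: str) -> timedelta:
    """Turn an 'M:SS' string such as '1:45' into a timedelta."""
    minutes, seconds = text.split(":")
    return timedelta(minutes=int(minutes), seconds=int(seconds))


export_time = parse_mmss("1:45")   # measured tar backup time, from the post
import_time = parse_mmss("1:45")   # assumed equal to the export time
downtime = export_time + import_time

print(downtime)  # 0:03:30
```

A larger library scales both halves, so the outage grows with the amount of artwork stored.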

I don’t even have a big library compared to most. What happens when chapter index and thumbnail images are created? They have to go somewhere.

The file and directory structure needs to be there.

I suggest running `tune2fs -l /dev/sdxx` and looking at how the FS was created.

I tune mine because I know I won’t need all the inodes for files, and I still get:

    Filesystem state:         clean
    Errors behavior:          Continue
    Filesystem OS type:       Linux
    Inode count:              21004288
    Block count:              84003328
    Reserved block count:     0
    Free blocks:              48574048
    Free inodes:              20277545
    First block:              0

aka

    [chuck@lizum plexdir.130]$ df -i /home
    Filesystem       Inodes  IUsed    IFree IUse% Mounted on
    /dev/sda7      21004288 726976 20277312    4% /home
    [chuck@lizum plexdir.131]$ df /home
    Filesystem     1K-blocks      Used Available Use% Mounted on
    /dev/sda7      330609160 142030916 188561860  43% /home
    [chuck@lizum plexdir.132]$
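
If you would rather check inode usage from a script than read `df -i`, something like the following sketch works on Linux and other Unix systems. It relies on `os.statvfs`; the helper names are mine.

```python
import os


def usage_percent(total: int, free: int) -> float:
    """Percentage of inodes in use, given total and free counts."""
    if total == 0:
        # Some file systems do not report a fixed inode count.
        return 0.0
    return 100.0 * (total - free) / total


def inode_usage(path: str) -> dict:
    """Inode totals for the file system that contains path."""
    st = os.statvfs(path)
    return {
        "total": st.f_files,   # total inodes
        "free": st.f_ffree,    # free inodes
        "used": st.f_files - st.f_ffree,
        "used_pct": usage_percent(st.f_files, st.f_ffree),
    }
```

Plugging in the `/home` figures above, `usage_percent(21004288, 20277312)` comes to about 3.46%, which `df` displays as 4%.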

If you want to clean up something excessive, not wasting space on unused inodes is a worthy challenge.
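
For a rough idea of what unused inodes cost, multiply the inode count by the on-disk inode size. The 256-byte figure below is an assumption (a common ext4 default); the real value is on the "Inode size" line of `tune2fs -l`, which is not in the excerpt above.

```python
INODE_SIZE_BYTES = 256  # assumption: common ext4 default, verify with tune2fs -l


def inode_table_bytes(inode_count: int, inode_size: int = INODE_SIZE_BYTES) -> int:
    """Bytes reserved on disk for the given number of inodes."""
    return inode_count * inode_size


total_bytes = inode_table_bytes(21_004_288)    # all inodes on /home
unused_bytes = inode_table_bytes(20_277_312)   # inodes shown as free by df -i

print(f"inode tables: {total_bytes / 1e9:.2f} GB")
print(f"of which unused: {unused_bytes / 1e9:.2f} GB")
```

Under that assumption, roughly 5.2 GB of the 5.4 GB set aside for inodes on this file system is holding inodes that are not in use.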

At this point, I really don’t think PMS would consider changing how it structures metadata posters without also performing a complete rewrite from scratch. I just don’t see that happening. It would require exhaustive testing and algorithm proofing on everything all over again. It would be like winding the clock back almost 10 years and throwing it out the window and overhauling the old XBMC storage