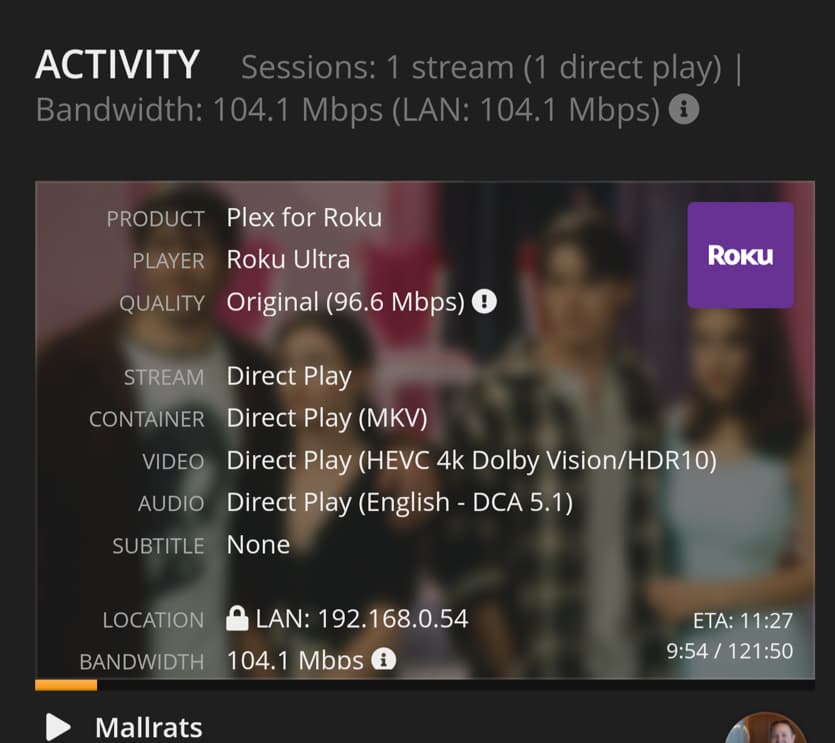

Server Version#: 1.32.4.7195

Player Version#: Roku Ultra

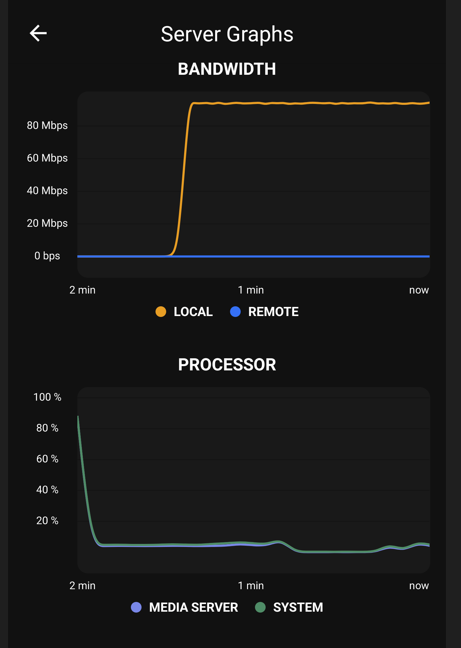

I’m getting a “Not Enough Bandwidth” error when playing a 4K movie from my local network, even though my server and clients are all hardwired via Ethernet on a gigabit-capable network.

I ran the connection speed test on my Roku Ultra, and it’s showing 93 Mbps, an excellent connection. The Roku only has a 100BASE-T (Fast Ethernet) port, not gigabit Ethernet.

In MediaInfo, the movie shows an average bitrate of 96.6 Mb/s, which I’m guessing is the issue: that average alone is higher than the 93 Mbps the Roku’s Ethernet connection delivers, so it may be flooding the link. I thought the Roku might just buffer the excess rather than choke on it.
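A rough way to see why buffering can’t absorb this: when the stream’s bitrate is above what the link delivers, the buffer loses the difference every second, so it only delays the stall. Here is a minimal sketch of that arithmetic, assuming a hypothetical 50 MB buffer (not a known Roku figure) and treating the 96.6 Mb/s average as constant:

```python
import math


def seconds_until_stall(buffer_mb: float, bitrate_mbps: float, link_mbps: float) -> float:
    """Seconds a full buffer lasts when the stream's bitrate outruns the link.

    buffer_mb is in megabytes; both rates are in megabits per second.
    Returns math.inf when the link keeps up, i.e. the buffer never drains.
    """
    deficit_mbps = bitrate_mbps - link_mbps  # megabits the buffer loses each second
    if deficit_mbps <= 0:
        return math.inf
    return buffer_mb * 8 / deficit_mbps  # 8 bits per byte


if __name__ == "__main__":
    LINK_MBPS = 93.0  # speed test result on the Roku's 100BASE-T port
    BUFFER_MB = 50.0  # hypothetical buffer size, not a known Roku figure
    for label, rate in [("Original (96.6 Mb/s avg)", 96.6), ("20 Mbps cap", 20.0)]:
        t = seconds_until_stall(BUFFER_MB, rate, LINK_MBPS)
        print(f"{label}: " + ("never drains" if math.isinf(t) else f"buffer empty after ~{t:.0f} s"))
```

With those assumptions the Original stream empties the buffer in roughly two minutes, while a 20 Mbps stream never drains it. Since 96.6 Mb/s is only an average, peaks above it would drain a real buffer even faster.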

The error in the logs is “Jul 09, 2023 08:11:08.213 [140704961477432] DEBUG - Failed to stream media, client probably disconnected after 1146880 bytes: 104 - Connection reset by peer”

Log attached. The movie is Mallrats, played from 8:11 am to 8:20 am.

Is the best solution to limit the bandwidth on the client side and let the server transcode down to something the Roku won’t choke on? Or should I switch to Wi-Fi, which is 802.11ac with a theoretical 450 Mbps?
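Whether Wi-Fi helps depends on the throughput the Roku actually gets, not the 450 Mbps link rate, since real-world Wi-Fi usually delivers well below its nominal rate. A minimal headroom check, assuming a hypothetical 50% Wi-Fi efficiency and a hypothetical 20% margin for bitrate peaks (both placeholders; a real speed test over Wi-Fi would replace them):

```python
def usable_mbps(nominal_mbps: float, efficiency: float) -> float:
    """Rough usable throughput: nominal link rate scaled by an efficiency factor."""
    return nominal_mbps * efficiency


def has_headroom(avg_bitrate_mbps: float, usable: float, margin: float = 1.2) -> bool:
    """True if usable throughput covers the average bitrate plus a safety margin.

    margin is a hypothetical allowance for bitrate peaks above the average.
    """
    return usable >= avg_bitrate_mbps * margin


if __name__ == "__main__":
    MOVIE_AVG_MBPS = 96.6  # MediaInfo average bitrate for the file
    links = {
        "Roku Ethernet (measured)": 93.0,
        # 50% efficiency is an assumption; measure real Wi-Fi throughput instead
        "802.11ac at an assumed 50% of 450 Mbps": usable_mbps(450, 0.5),
    }
    for name, usable in links.items():
        verdict = "enough headroom" if has_headroom(MOVIE_AVG_MBPS, usable) else "too tight"
        print(f"{name}: {usable:.0f} Mbps usable -> {verdict}")
```

Under these assumptions the wired 93 Mbps is too tight for the file, while Wi-Fi would have room to spare, but only if the measured Wi-Fi throughput at the Roku’s location actually comes in well above about 116 Mbps.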

Edit/Update: After some experimenting, it does seem to be the Roku’s bandwidth limit. I changed Local Quality to 20 Mbps and the crashes stopped. When I set it back to Original, they resumed. I guess I’ll try Wi-Fi next. Otherwise I’ll add a Shield TV, which has a gigabit Ethernet port.

New question, though: when transcoding, I noticed two things.

A) PMS didn’t keep the video in HDR; it transcoded it down to SDR. Is there any way to avoid that?

B) PMS transcoded the audio even though it didn’t need to. In Original, it played the DTS (DCA 5.1) audio track directly, but when transcoding the video, it also transcoded the audio to AC3 5.1.

Plex Media Server.zip (815.1 KB)